Updated November 2023

In my career as a research leader for the US Government, I have pursued a range of research in mathematical modeling of complex information systems, data science, and cybernetic philosophy, with applications in reliability analysis, computational biology, information warfare, cyber analytics, infrastructure protection, law enforcement, and distributed ledger technology. Below, I detail my research areas, including all of my published papers.

- Computational Topology and Hypergraph Analytics
- Knowledge-Informed Machine Learning and Neurosymbolic Computing
- Applied Lattice Theory, Formal Concept Analysis, and Interval Computation
- Generalized Information Theory, Uncertainty Quantification, and Possibilistic Information Theory
- Computational and Theoretical Biology, Bio-Ontologies, and Ontological Protein Function Annotation
- Semantic Technology, Ontology Metrics, and Knowledge Representation
- Relational Data Modeling and Discrete Systems
- Cybernetic Philosophy, Computational Semiotics, and Evolutionary Systems Theory
- Distributed Ledger Technology: Blockchain, Cryptocurrency, and Smart Contracts
- High Performance and Semantic Graph Database Analytics
- Agent-Based and Discrete Event Modeling
- Cyber Analytics
- Book Reviews and Other Works
- Decision Support and Reliability Analysis
- Principia Cybernetica: Distributed Development of a Cybernetic Philosophy
- HyperNetX (HNX): Hypergraph Analytics for Python

Research Areas

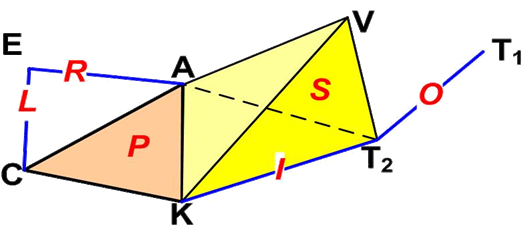

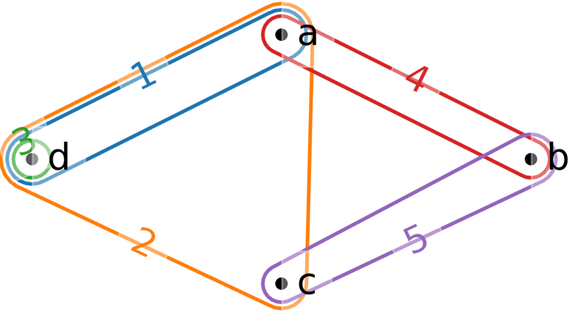

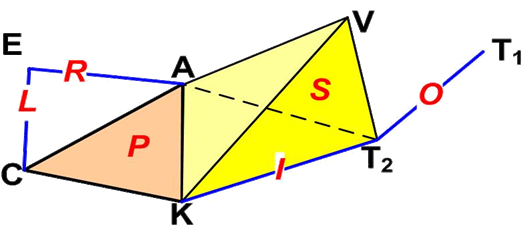

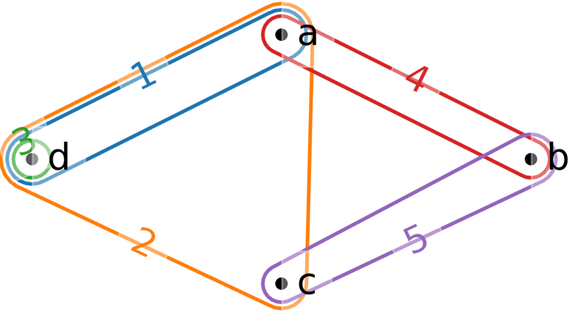

Complex systems are essentially characterized by producing high-dimensional data, which admit a variety of mathematical interpretations. Hypergraphs are natural high-dimensional generalizations of graphs that can represent high-order interactions among entities. Our Python package HyperNetX supports "hypernetwork science" extensions of network science methods such as centrality, connectivity, and clustering. As finite set systems, hypergraphs are closely related to other structures such as abstract simplicial complexes, all of which are interpretable as finite orders and finite topological spaces. Such topological objects admit homological analysis to identify overall shape and structure, the most prominent method for these goals being the persistent homology of Topological Data Analysis (TDA). When topological structures such as hypergraphs are equipped with data (either simple weights or more complex data types) and a logic of how those data interact, topological sheaves can model constraints across dimensional levels. This yields canonical and provably necessary methods for heterogeneous information integration, assessing the global consistency of information sources from their local interactions.
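As a concrete illustration of hypergraphs as finite set systems, the following plain-Python sketch stores a small hypothetical hypergraph as named edges over node sets. It deliberately avoids the HyperNetX API. It computes the s-line graph, in which two hyperedges are adjacent when they share at least s nodes, and the resulting s-connected components. This is the kind of high-order generalization of connectivity that hypernetwork science builds on; the data and function names are illustrative assumptions only.

```python
from itertools import combinations

# Hypothetical example hypergraph: edge name -> set of nodes.
HYPERGRAPH = {
    "e1": {"a", "b", "c"},
    "e2": {"b", "c", "d"},
    "e3": {"c", "d", "e", "f"},
    "e4": {"g", "h"},
}


def s_line_graph(edges, s=1):
    """Adjacency sets of the s-line graph: two hyperedges are linked
    when their node sets share at least s nodes."""
    adj = {name: set() for name in edges}
    for (n1, v1), (n2, v2) in combinations(edges.items(), 2):
        if len(v1 & v2) >= s:
            adj[n1].add(n2)
            adj[n2].add(n1)
    return adj


def s_components(edges, s=1):
    """Group hyperedges into s-connected components by depth-first
    search over the s-line graph."""
    adj = s_line_graph(edges, s)
    seen, components = set(), []
    for start in sorted(adj):
        if start in seen:
            continue
        stack, comp = [start], set()
        while stack:
            node = stack.pop()
            if node in comp:
                continue
            comp.add(node)
            stack.extend(adj[node] - comp)
        seen |= comp
        components.append(comp)
    return components
```

With this toy input, e1, e2, and e3 form a single component for both s = 1 and s = 2 (e1 and e3 share only node c, but stay 2-connected through e2), while at s = 3 every hyperedge is isolated.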

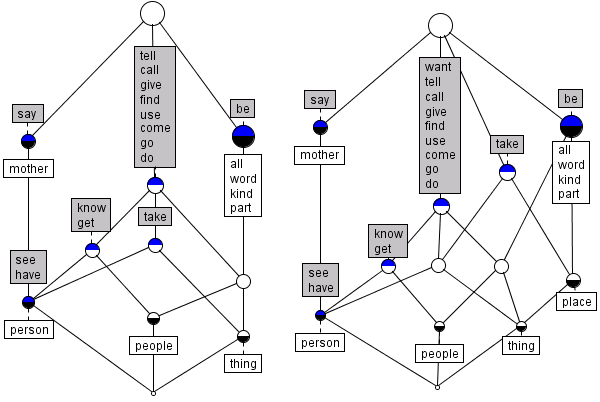

Hierarchy is an inherent systems principle and concept, and is necessarily endemic in complex systems of all types. Mathematically, hierarchy is modeled by partial orders and lattices, so order theory generally is a central concern for complex systems. There are deep relations between orders, semantic information, and relational data structures, as reflected in semantic hierarchies and formal concept analysis. As hierarchies are systems admitting descriptions in terms of levels, interval representations are also a deeply related concept. Finally, in a finite context appropriate for data science, hypergraphs benefit greatly from being represented as set systems ordered by inclusion; perhaps even more importantly, in the finite case orders and topological spaces are equivalent, so computational topology in particular is deeply wedded to lattice theory.

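Formal concept analysis makes the link between relational data and lattices concrete. Each formal concept pairs a maximal set of objects with the maximal set of attributes they all share, and the concepts, ordered by inclusion of their object sets, form a complete lattice. The following brute-force sketch in plain Python runs over a hypothetical toy context; the animals and attributes are illustrative only. Practical tools use algorithms such as NextClosure instead, since this enumeration is exponential in the number of objects.

```python
from itertools import combinations

# Hypothetical formal context: object -> set of attributes it has.
CONTEXT = {
    "sparrow": {"flies", "feathers"},
    "penguin": {"swims", "feathers"},
    "bat": {"flies", "fur"},
    "otter": {"swims", "fur"},
}
ATTRIBUTES = set().union(*CONTEXT.values())


def common_attributes(objects):
    """Derivation A -> A': attributes shared by every object in A."""
    result = set(ATTRIBUTES)
    for obj in objects:
        result &= CONTEXT[obj]
    return result


def common_objects(attributes):
    """Derivation B -> B': objects having every attribute in B."""
    return {obj for obj, attrs in CONTEXT.items() if attributes <= attrs}


def formal_concepts():
    """Close every object subset A to the concept (A'', A').
    Every concept arises this way, so the result is complete."""
    concepts = set()
    objects = sorted(CONTEXT)
    for r in range(len(objects) + 1):
        for subset in combinations(objects, r):
            intent = common_attributes(subset)
            extent = common_objects(intent)
            concepts.add((frozenset(extent), frozenset(intent)))
    return concepts
```

The toy context yields 10 concepts: the bottom (no objects, all attributes), one concept per animal, one per shared attribute, and the top (all animals, no attributes).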

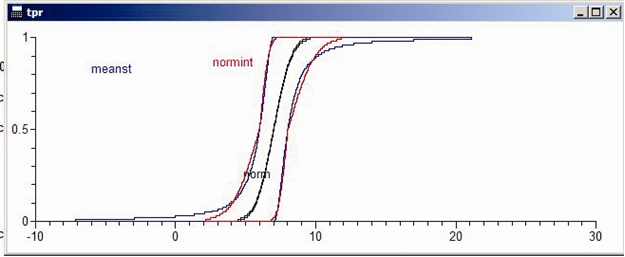

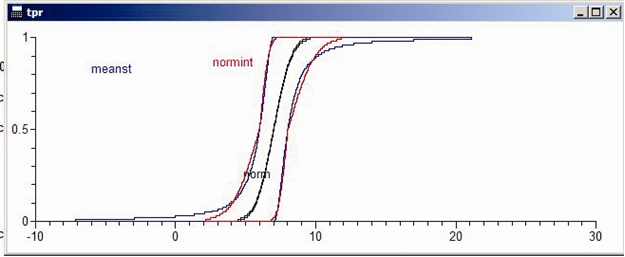

Any effort to model complex information systems must depend on a solid understanding of the mathematical foundations of information and of its conceptual sibling, uncertainty. While for decades Shannon and Weaver's statistical entropy has provided the classical grounding of information theory, a broader range of mathematical methods is also available to provide needed generalization and richness. Careful relaxation of key axioms yields a range of non-additive measures representing a diverse collection of uncertainty semantics, extending beyond probability, randomness, and likelihood to include belief, possibility, precision, necessity, vagueness, nonspecificity, and plausibility. Sub-fields such as monotone measures, Dempster-Shafer evidence theory, and fuzzy systems provide the mathematical grounds for these approaches. My thesis established possibilistic systems theory as a generalization of stochastic methods based on empirical random sets, grounding possibilistic systems in measured random intervals. Applications include decision support systems and the simulation of large engineering and infrastructure systems.
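Dempster-Shafer evidence theory shows concretely how relaxing additivity yields paired measures. The sketch below, in plain Python with exact fractions, computes belief, plausibility, and the generalized Hartley measure of nonspecificity for a hypothetical body of evidence. Because its focal sets are nested (consonant), the plausibility of singletons is a possibility distribution, which is the bridge to possibilistic systems. The frame, masses, and function names are illustrative assumptions.

```python
from fractions import Fraction
from math import log2

# Hypothetical body of evidence on the frame {1, 2, 3, 4}: each focal set
# (a frozenset) carries a mass, and the masses sum to 1. The focal sets are
# nested, i.e. the evidence is consonant.
MASS = {
    frozenset({2}): Fraction(1, 5),
    frozenset({1, 2, 3}): Fraction(1, 2),
    frozenset({1, 2, 3, 4}): Fraction(3, 10),
}


def belief(mass, event):
    """Bel(E): total mass of focal sets contained in E."""
    return sum(m for focal, m in mass.items() if focal <= event)


def plausibility(mass, event):
    """Pl(E): total mass of focal sets that intersect E."""
    return sum(m for focal, m in mass.items() if focal & event)


def nonspecificity(mass):
    """Generalized Hartley measure N(m) = sum over A of m(A) * log2|A|, in bits."""
    return sum(m * log2(len(focal)) for focal, m in mass.items())


def contour(mass, frame):
    """Plausibility of each singleton; for consonant evidence this is the
    possibility distribution pi(x)."""
    return {x: plausibility(mass, frozenset({x})) for x in sorted(frame)}
```

Here Bel({1, 2, 3}) = 7/10 and Pl({1, 2, 3}) = 1, which can be read as lower and upper bounds on the probability of that event, while the contour gives the possibility distribution 4/5, 1, 4/5, 3/10 over the four elements.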

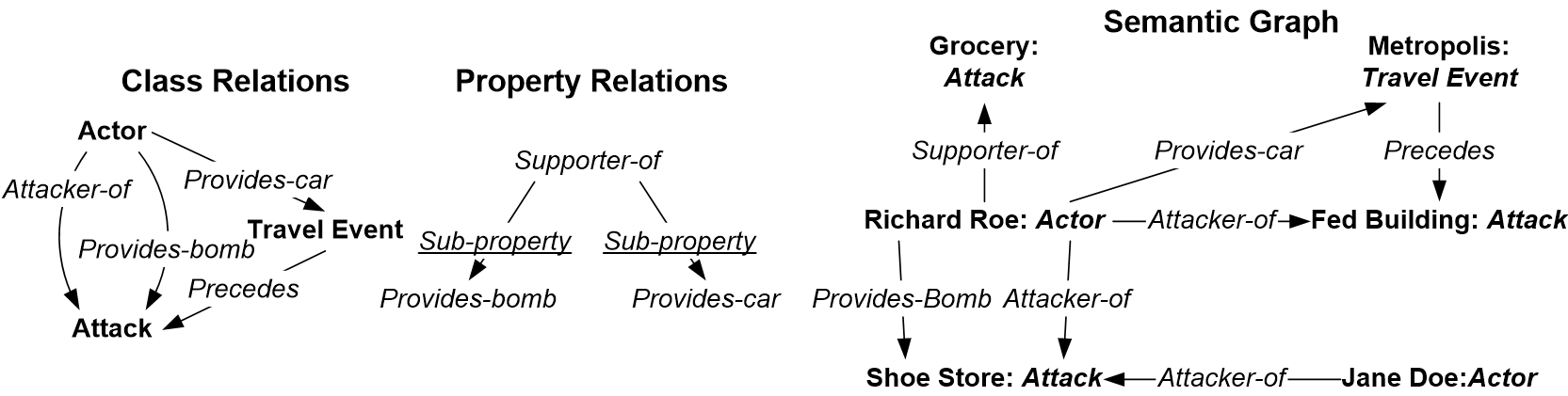

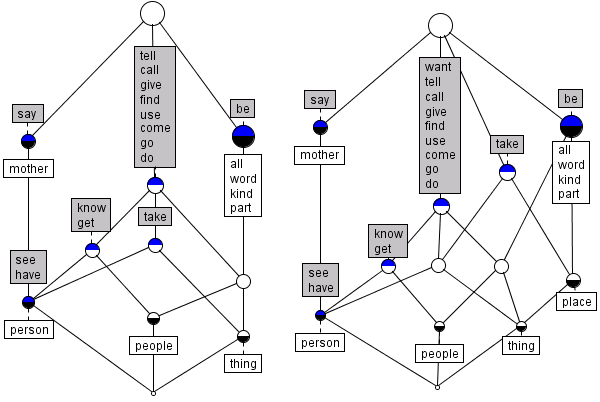

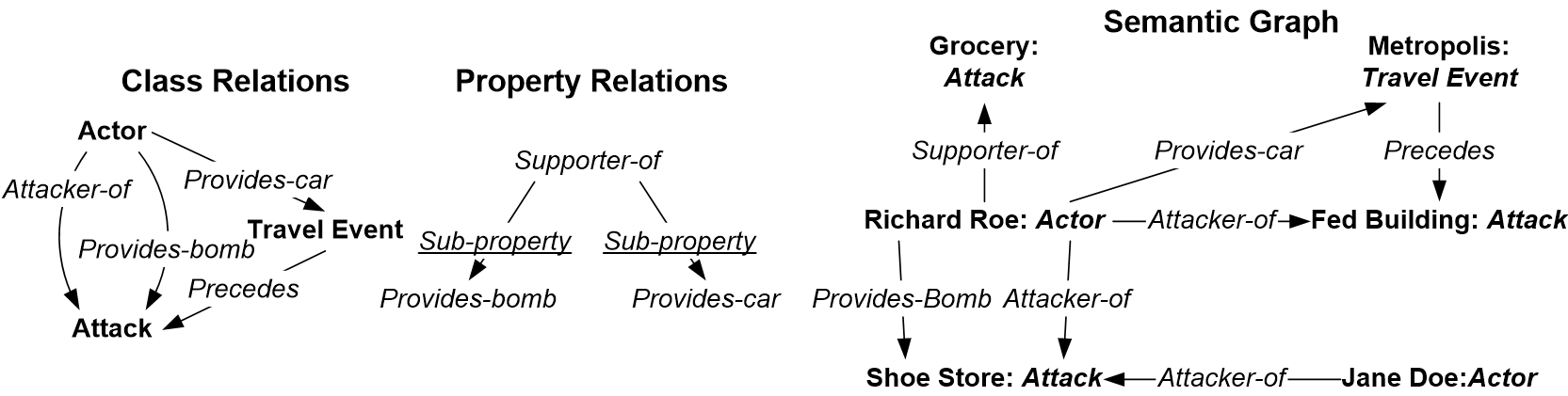

"Information" in the sense of information theory is quantitative. While critical, this aspect is not concerned with semantics: what the information can mean, or how it can be interpreted.

Understanding semantic or symbolic information is a philosophically challenging problem, closely related to computational linguistics and semiotics. Semantic technology has arisen in the context of Artificial Intelligence to formally represent levels of meaning and reference in systems. Semantic processing in this sense requires meta-level coding of information tokens in terms of their semantic types, as formalized in semantic hierarchies or ontologies. Instance statements are predicates in the language of these semantic types, and are typically represented in ontology-labeled semantic graphs. Formal ontologies are complex objects which benefit greatly from mathematical analysis and formal representation. My approaches to modeling ontologies as lattices, and semantic graphs as ontologically-labeled directed hypergraphs, have proven very valuable for their management and analysis, in tasks such as ontology alignment and the ontological annotation of information sources.
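To illustrate why treating an ontology as an ordered set matters, the sketch below represents a small hypothetical is_a hierarchy. The term names are made up and only loosely Gene Ontology-like. It computes the least common subsumers of two terms: the minimal elements among their shared ancestors. In a lattice every pair of terms has exactly one such join; real ontologies often fail this, as the last example pair shows.

```python
# Hypothetical toy ontology: each term maps to its direct is_a parents.
IS_A = {
    "entity": set(),
    "process": {"entity"},
    "metabolic process": {"process"},
    "lipid metabolism": {"metabolic process"},
    "transport": {"process"},
    "ion transport": {"transport"},
    "lipid transport": {"transport", "lipid metabolism"},
    "sterol transport": {"transport", "lipid metabolism"},
}


def ancestors(term):
    """All terms reachable upward via is_a, including the term itself."""
    found, stack = set(), [term]
    while stack:
        t = stack.pop()
        if t not in found:
            found.add(t)
            stack.extend(IS_A[t])
    return found


def least_common_subsumers(a, b):
    """Minimal elements of the common ancestors of a and b.

    In a lattice this set always has exactly one element (the join);
    in a general partial order it can have several."""
    common = ancestors(a) & ancestors(b)
    return {c for c in common
            if not any(d != c and c in ancestors(d) for d in common)}
```

For instance, `least_common_subsumers("lipid transport", "ion transport")` returns `{"transport"}`, whereas "lipid transport" and "sterol transport" have two incomparable least common subsumers, "transport" and "lipid metabolism", so this toy hierarchy is not a lattice.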

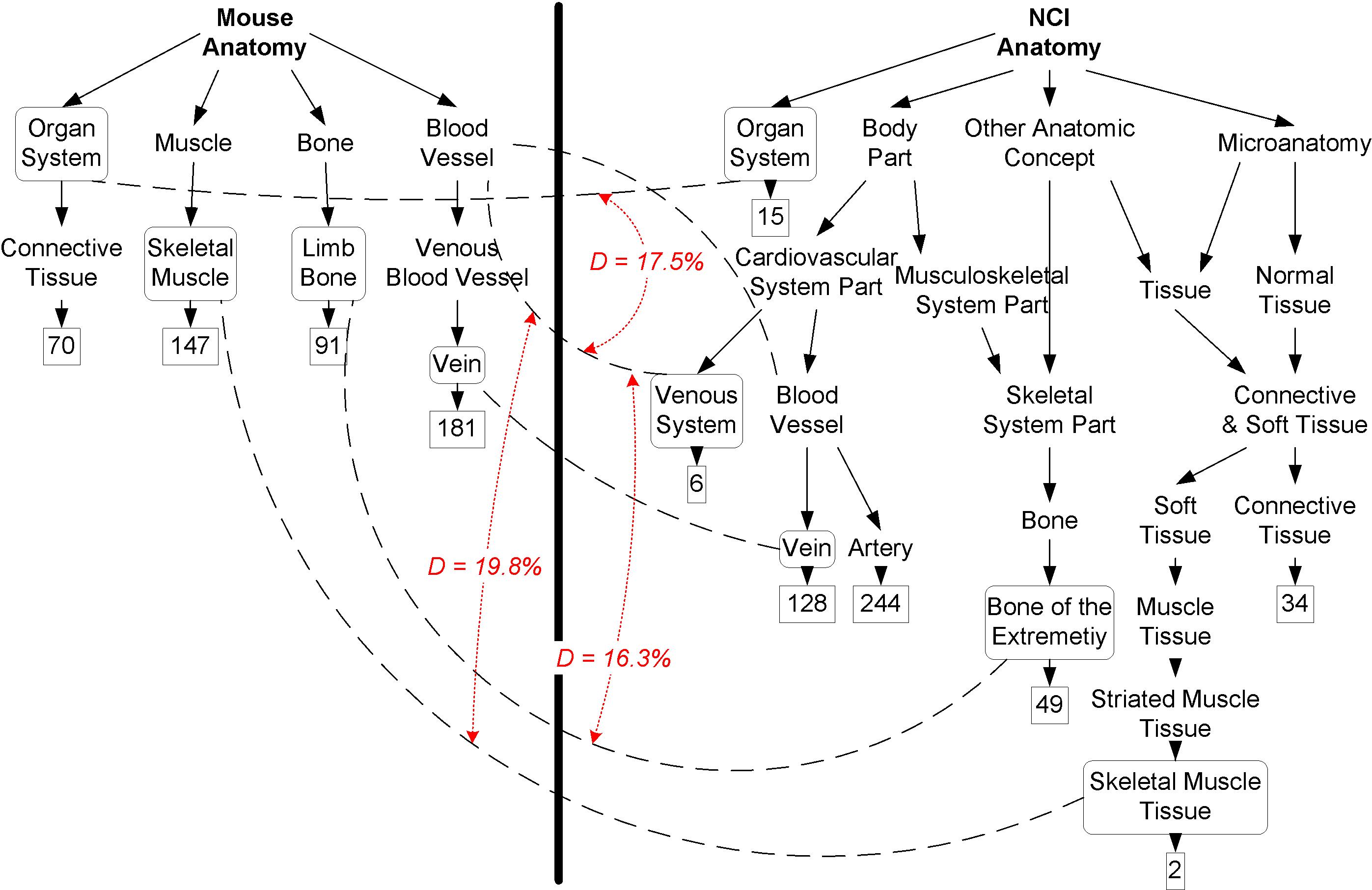

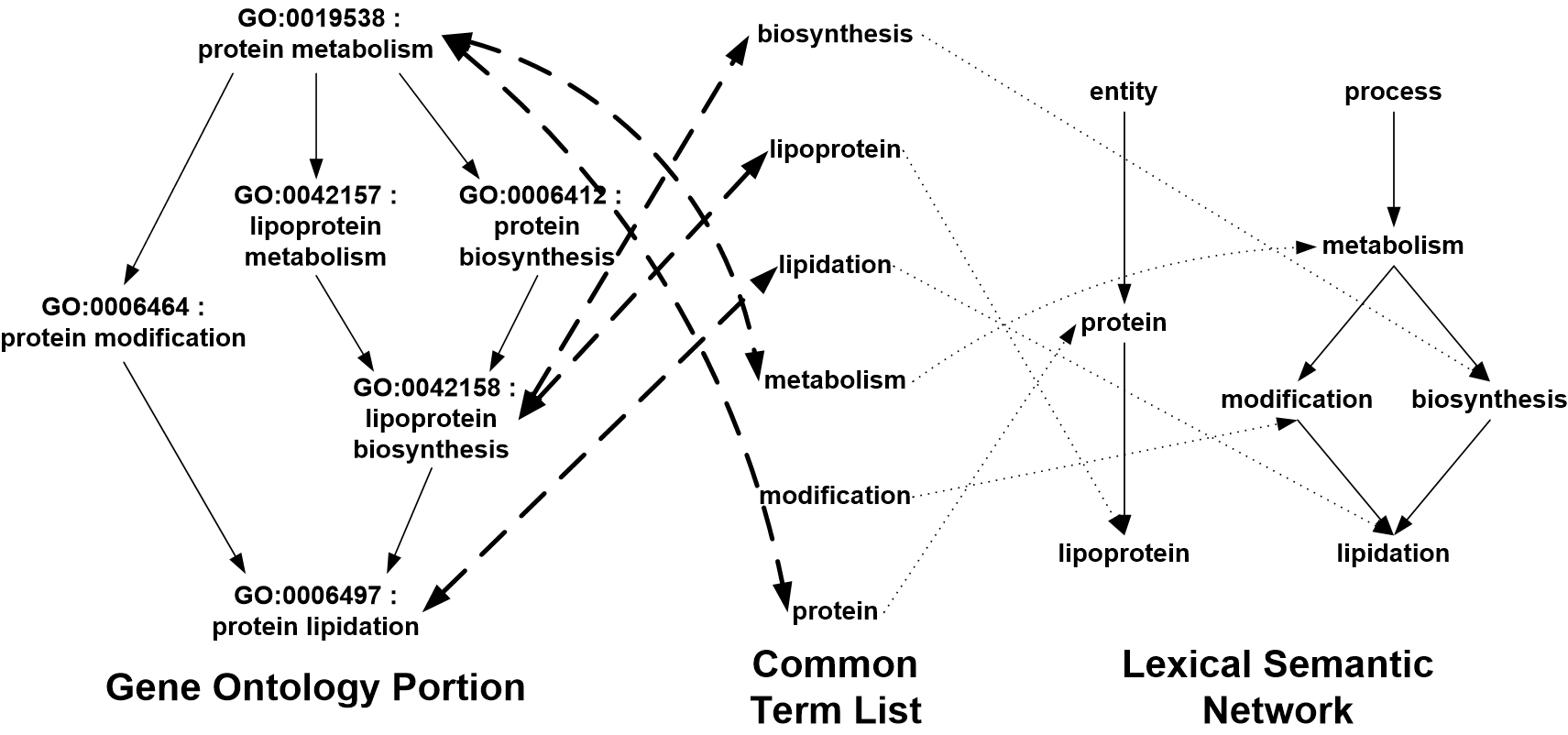

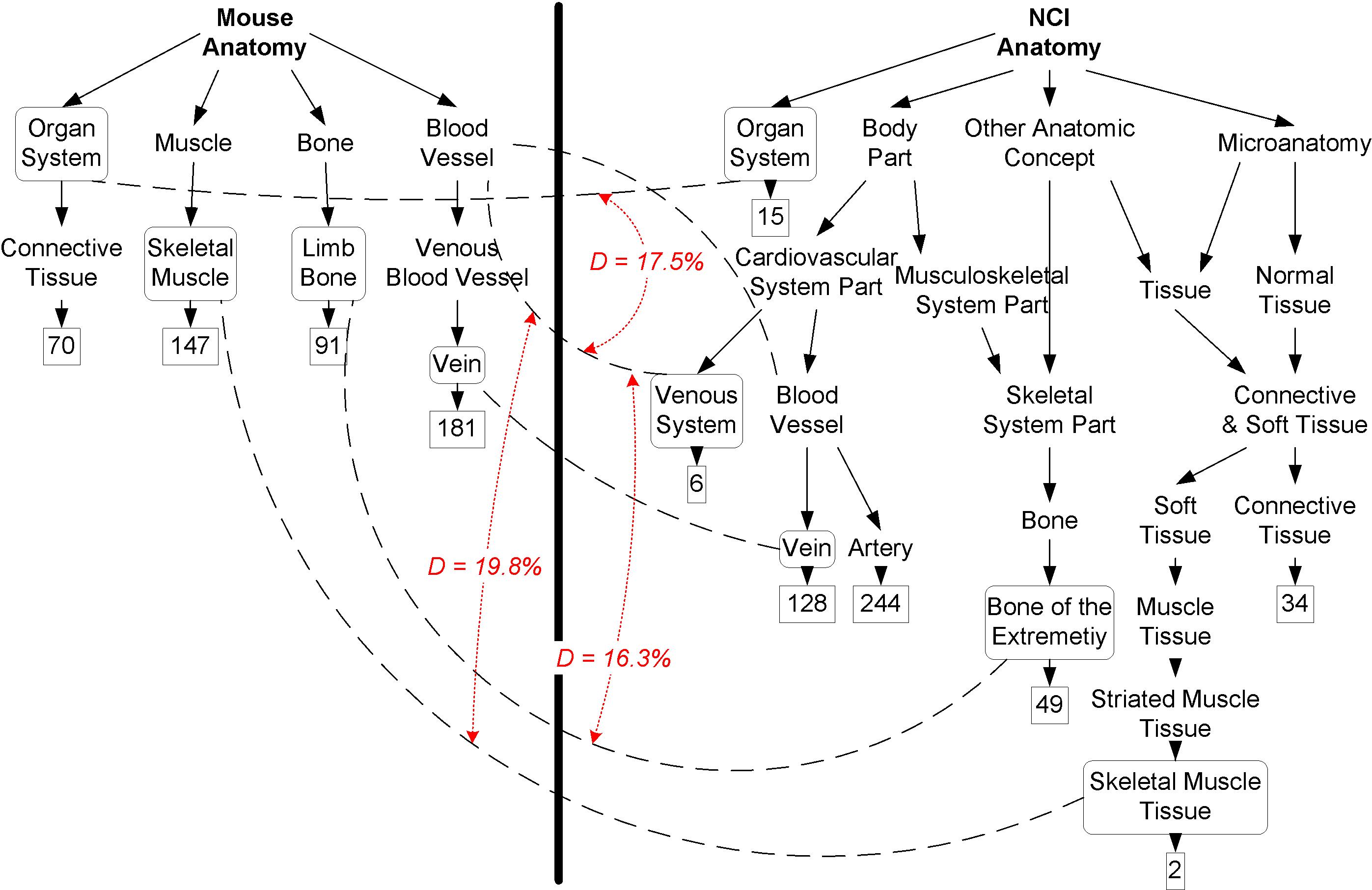

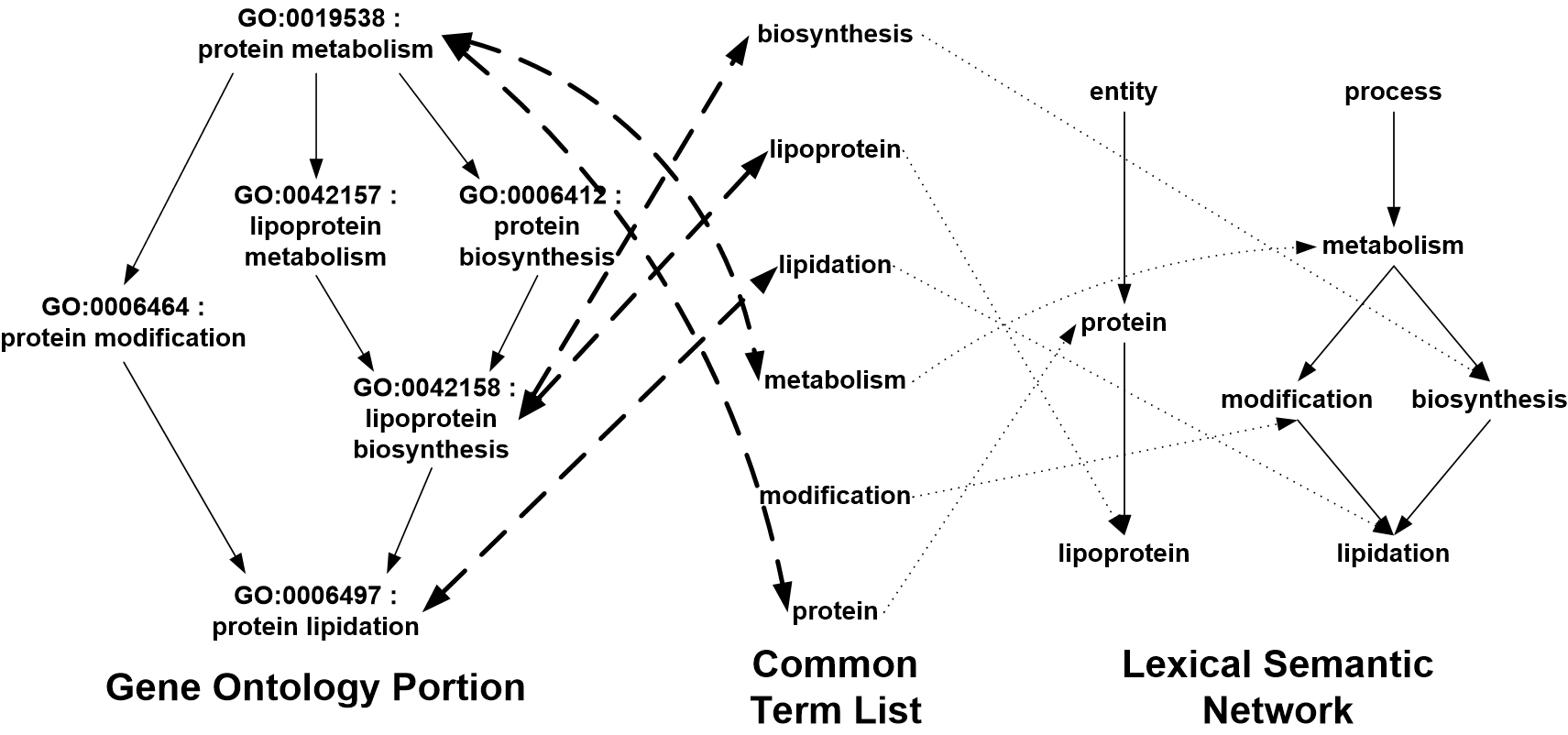

When considering the range of complex information systems, biological systems stand out as the paradigm and the epitome. Organisms encompass vast numbers and many types of very specifically interacting entities, supporting energetic regulation and control across a range of hierarchically organized levels. They also mark the first appearance of information processing in evolutionary history, manifesting self-replication and the open-ended evolution of increasing complexity. The genomic revolution of the early 21st century then provided the basis for the computational analysis and modeling of this complexity, interacting with semantic technologies through the bio-ontology and systems biology movements. My work here first focused on the mathematical analysis of bio-ontologies, especially for automated ontological protein function prediction, and then more recently on hypergraph analytics for multi-omic studies.


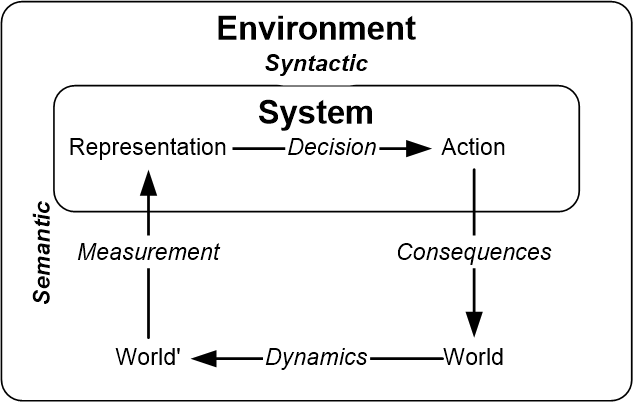

Systems theory, or systems science, is the transdisciplinary study of the abstract organization of phenomena, independent of their substance, type, or spatial or temporal scale of existence. As a post-war intellectual movement, it was closely coupled with cybernetics as the science of control and communication in systems of all types. Together, systems science and cybernetics provided the first "science of complexity", and laid the groundwork for a range of later developments in computer science and mathematics, including complex adaptive systems, evolutionary systems, artificial intelligence, and artificial life. Because it is fundamentally concerned with the nature of information in systems, cybernetics is also deeply involved with all aspects of semiotics as the science of signs and symbols and their interpretation, especially in domains beyond the cultural level. This includes biological semiotics as the foundation of biology, and computational semiotics as the foundation for AI. As a lifelong student of systems and cybernetics, and especially of their mathematical and philosophical foundations, I have advanced core theories in fundamental hierarchy theory and complexity science, including evolutionary systems. I also co-founded Principia Cybernetica, a pioneering site from the dawn of the Internet age to develop a complete cybernetic philosophy and encyclopedia supported by collaborative computer technologies.


While complex systems representations can take many forms, and complexity has many aspects, there is a canonical structure for them from discrete mathematics, which we can call a multi-relational system. These can be thought of as data tensors: a collection of N dimensions of data, likely of different types, representable in an N-fold space, table, or multi-dimensional array. Additionally, these dimensions or variables can have complex interactions, also thought of as dependencies or constraints. And while the data in each dimension may be fully explicated in detail, it is also common for there to be statistical distributions on dimensions instead. This overall mathematical structure yields a variety of special cases of interest, including graphs and hypergraphs. Also prominent are multivariate statistical and graphical models such as Bayes nets, as well as OnLine Analytical Processing (OLAP) systems for handling complex relational data. My work has focused on the use of discrete mathematical tools for analyzing these multi-relational systems, including tensors and lattices of set partitions and covers.
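One way to see the role of partition lattices: each dimension of a multi-relational system induces a partition of its records (records with equal values fall in one block), and grouping by several dimensions at once is the meet of those partitions. The following plain-Python sketch, over a hypothetical five-record table, computes meets (common refinements), joins (common coarsenings), and the refinement order. It is an illustration of the structure only, not a method taken from the works listed below.

```python
# Each dimension of a multi-relational system induces a partition of its
# records; partitions are lists of frozensets ("blocks") sorted for display.

# Hypothetical five-record table with two dimensions.
RECORDS = {
    1: {"color": "red", "shape": "circle"},
    2: {"color": "red", "shape": "square"},
    3: {"color": "blue", "shape": "square"},
    4: {"color": "blue", "shape": "circle"},
    5: {"color": "green", "shape": "circle"},
}


def partition_by(records, key):
    """Group record ids by their value in one dimension."""
    blocks = {}
    for rid, row in records.items():
        blocks.setdefault(row[key], set()).add(rid)
    return sorted((frozenset(b) for b in blocks.values()), key=sorted)


def meet(p, q):
    """Coarsest common refinement: the nonempty pairwise block intersections."""
    return sorted((a & b for a in p for b in q if a & b), key=sorted)


def join(p, q):
    """Finest common coarsening: merge overlapping blocks with union-find."""
    parent = {}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for block in list(p) + list(q):
        items = sorted(block)
        for x in items:
            parent.setdefault(x, x)
        for x in items[1:]:
            parent[find(x)] = find(items[0])
    groups = {}
    for x in parent:
        groups.setdefault(find(x), set()).add(x)
    return sorted((frozenset(g) for g in groups.values()), key=sorted)


def refines(p, q):
    """True when p <= q in the partition lattice (every block of p fits in one of q)."""
    return all(any(a <= b for b in q) for a in p)
```

For the toy table, color and shape do not refine one another; their meet separates every record, and their join lumps all five records into one block.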

Hypergraph- and graph-structured data are increasingly prominent in massive data applications. Computational semantic systems are typically deployed within the specific technologies of the Semantic Web paradigm, including the Resource Description Framework (RDF) for instance information, the Web Ontology Language (OWL) for ontological typing information, and SPARQL as a query language. The result is a semantic graph database (SGDB), or a property graph database, as a graph-theoretical analog to a relational database, typically engineered as a triple store.
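At its core, a triple store answers SPARQL basic graph patterns by matching triple patterns containing variables against stored (subject, predicate, object) triples and joining the resulting variable bindings. The sketch below shows that idea in plain Python on a handful of made-up triples with hypothetical `ex:` names. It is not an RDF library: it ignores OWL typing, indexing, and query planning, and uses a naive nested-loop join.

```python
# Hypothetical in-memory triple store: a set of (subject, predicate, object).
TRIPLES = {
    ("ex:alice", "rdf:type", "ex:Analyst"),
    ("ex:bob", "rdf:type", "ex:Analyst"),
    ("ex:alice", "ex:worksOn", "ex:projectX"),
    ("ex:bob", "ex:worksOn", "ex:projectY"),
    ("ex:projectX", "ex:topic", "ex:hypergraphs"),
}


def is_var(term):
    """SPARQL-style variables are written with a leading '?'."""
    return term.startswith("?")


def match(pattern, triple, binding):
    """Extend a variable binding so that pattern matches triple, or return None."""
    new = dict(binding)
    for p, t in zip(pattern, triple):
        if is_var(p):
            if new.setdefault(p, t) != t:
                return None  # variable already bound to a different value
        elif p != t:
            return None  # constant term does not match
    return new


def query(patterns, triples=TRIPLES):
    """Evaluate a basic graph pattern (a conjunction of triple patterns)
    by extending every current binding with every matching triple."""
    bindings = [{}]
    for pattern in patterns:
        extended = []
        for binding in bindings:
            for triple in triples:
                result = match(pattern, triple, binding)
                if result is not None:
                    extended.append(result)
        bindings = extended
    return bindings
```

For example, querying for analysts who work on a project whose topic is `ex:hypergraphs` returns the single binding `{"?who": "ex:alice", "?proj": "ex:projectX"}`.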

SGDBs are being developed to scale massively, requiring new engineering models. In particular, "irregular memory" data objects such as graphs and other combinatorial data structures require alternate memory models for high-performance scaling. We have pursued a range of methods in a variety of HPC environments, including the Cray XMT and Cray's Chapel language, for both semantic and property graphs, and for hypergraph data models at high scale.

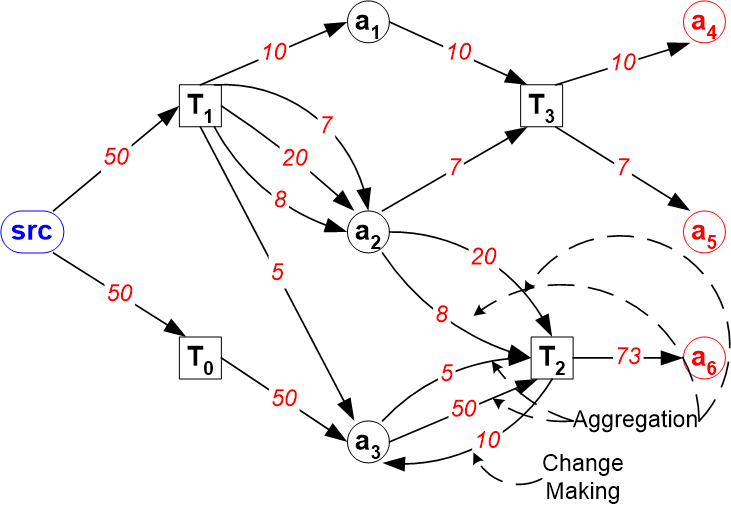

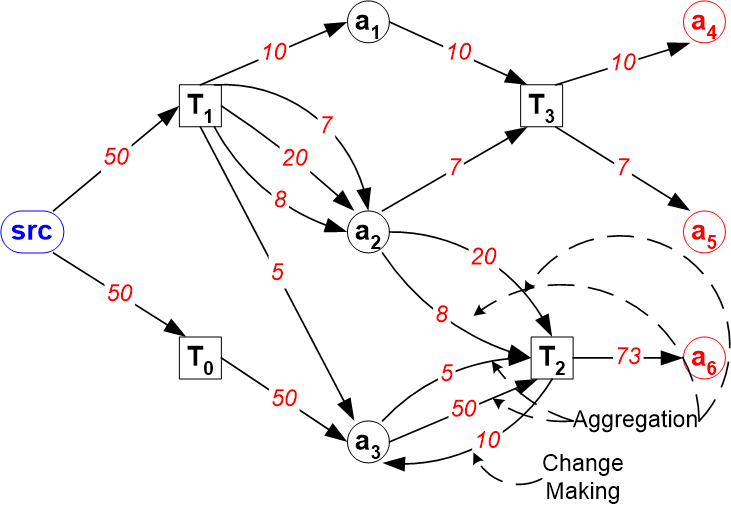

Distributed cryptographic systems are revolutionizing the way that digital work and workflows are organized, promising a broad and sustained impact on the entire fabric of the digital space and information flows. While cryptocurrencies like Bitcoin remain the dominant technology and application in the world of blockchain-enabled distributed ledgers, so-called "smart contract" systems can provide a general, cryptographically secure distributed computing environment broadly capable of securely automating general workflows. Cryptocurrency transaction networks naturally take the form of directed hypergraphs, and we have modeled exchange patterns through directed hypergraph motifs. We are also pioneering applications of novel smart contract systems for nuclear safeguards and export control.

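A Bitcoin-style transaction spends from a set of inputs to a set of outputs. Treating addresses as nodes, a common simplification, each transaction is naturally a directed hyperedge from a tail set to a head set. The sketch below builds that representation over hypothetical transactions and counts two very simple patterns: transactions by their (inputs, outputs) shape, and two-step chains where one transaction's output address is spent in another. These patterns are illustrative stand-ins, not the exchange-pattern motifs defined in the Bitcoin 2017 paper listed below.

```python
from collections import defaultdict

# Hypothetical transactions: id -> (tail: input addresses, head: output addresses).
# Each transaction is one directed hyperedge from its tail set to its head set.
TRANSACTIONS = {
    "t1": ({"A", "B"}, {"C", "D"}),
    "t2": ({"C"}, {"E"}),
    "t3": ({"E"}, {"F", "G"}),
    "t4": ({"D", "H"}, {"I"}),
}


def degrees(txs):
    """In-degree: hyperedges paying into an address (head membership).
    Out-degree: hyperedges spending from it (tail membership)."""
    in_deg, out_deg = defaultdict(int), defaultdict(int)
    for tail, head in txs.values():
        for a in tail:
            out_deg[a] += 1
        for a in head:
            in_deg[a] += 1
    return dict(in_deg), dict(out_deg)


def shape_counts(txs):
    """Count transactions by (|tail|, |head|), e.g. (1, 1) for one-in-one-out."""
    counts = defaultdict(int)
    for tail, head in txs.values():
        counts[(len(tail), len(head))] += 1
    return dict(counts)


def chains(txs):
    """Ordered pairs (t, u) where an output address of t is an input of u."""
    return sorted((t, u) for t, (_, head) in txs.items()
                  for u, (tail, _) in txs.items() if t != u and head & tail)
```

On the toy data, `chains(TRANSACTIONS)` returns `[("t1", "t2"), ("t1", "t4"), ("t2", "t3")]`, since t1 pays C and D, which are then spent in t2 and t4.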

Along with organisms, cyber systems are another prime example of complex information systems. Our work brings a range of discrete mathematical techniques, including hypergraphs, computational topology, and interval and distributional analysis, to the study of cyber data such as Netflow, DNS, and malware catalogs.

Agent-Based and Discrete Event Modeling

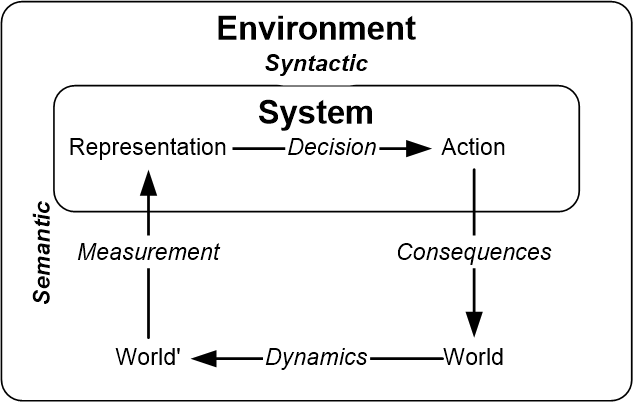

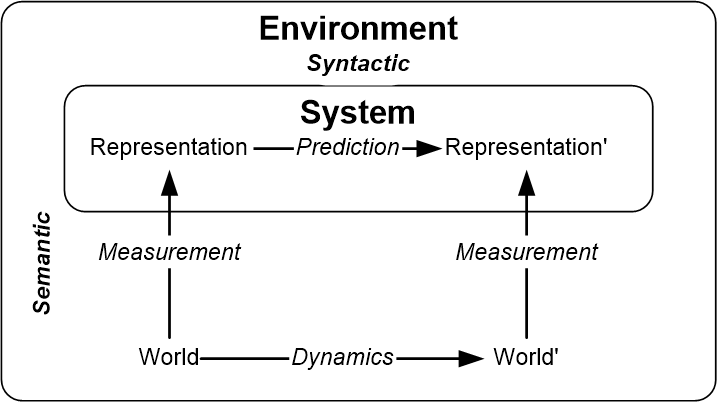

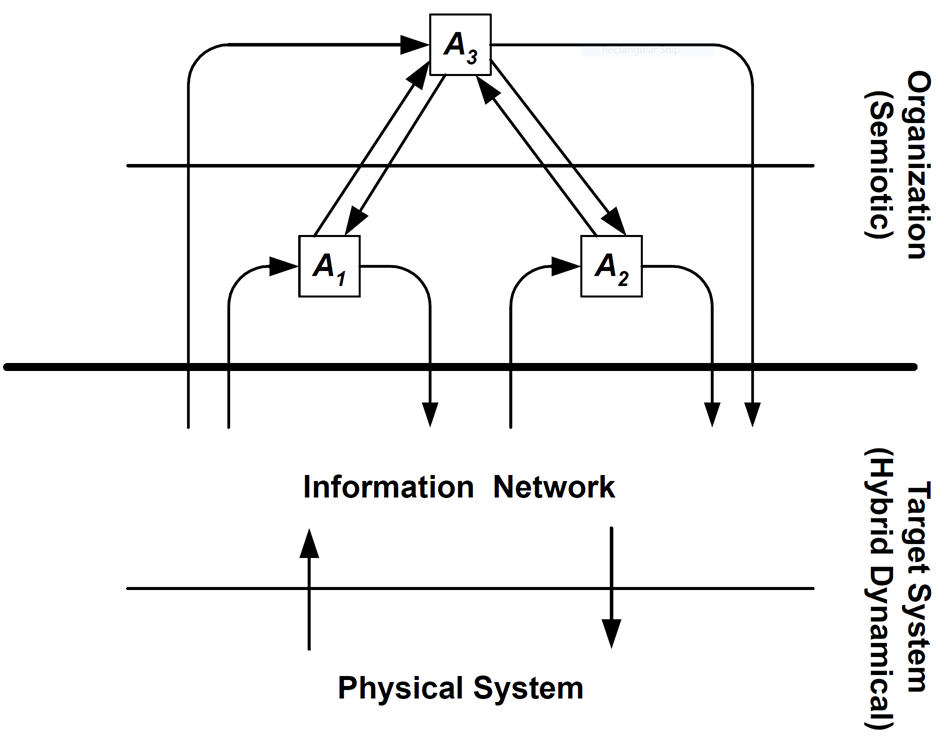

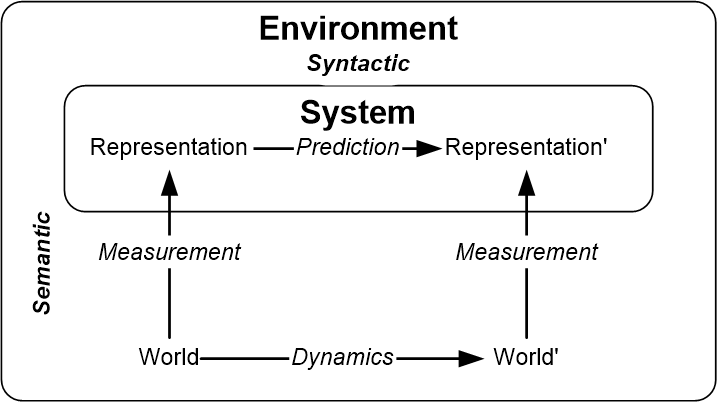

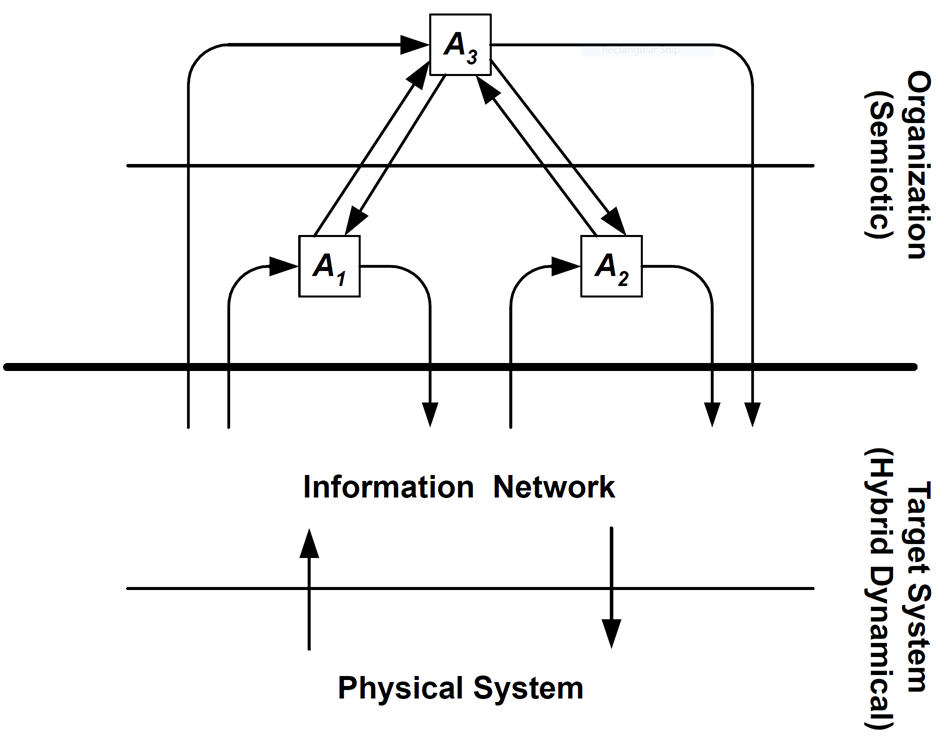

Systems theory can be seen as fundamentally concerned with two concepts: 1) the nature of models, the mathematical relationships between classes of models, and how models can be transformed among those classes; and 2) the nature of agents as autonomous entities interacting with the world and each other to effect control relations for their survival. The concept of the semiotic agent joins these, in the sense of an autonomous model-based control system equipped, recurrently, with models of other interacting model-based control systems. In that context, my work has explored the role of possibilistic automata within the abstract, universal Discrete EVent Systems (DEVS) modeling formalism, and has studied the use of semiotic agent models in socio-technical organizations.

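In a possibilistic automaton, transitions carry possibility degrees rather than probabilities, and the possibility distribution over states is updated by max-min composition instead of the sum-product rule of a Markov chain. The following plain-Python sketch uses a made-up three-state machine and hypothetical transition degrees. It shows only this core update and makes no attempt to embed it in a DEVS simulator.

```python
# Hypothetical possibilistic automaton: T[(state, input)][next_state] is the
# possibility in [0, 1] of that transition; missing entries mean 0.
STATES = ("idle", "busy", "failed")
T = {
    ("idle", "job"): {"busy": 1.0, "failed": 0.2},
    ("busy", "job"): {"busy": 1.0, "failed": 0.5},
    ("busy", "done"): {"idle": 1.0, "failed": 0.1},
    ("idle", "done"): {"idle": 1.0},
    ("failed", "job"): {"failed": 1.0},
    ("failed", "done"): {"failed": 1.0},
}


def step(pi, symbol):
    """Max-min composition: pi'(s') = max over s of min(pi(s), T(s, symbol, s'))."""
    nxt = {s: 0.0 for s in STATES}
    for s, ps in pi.items():
        for s2, pt in T.get((s, symbol), {}).items():
            nxt[s2] = max(nxt[s2], min(ps, pt))
    return nxt


def run(pi, symbols):
    """Apply step for each input symbol in turn."""
    for symbol in symbols:
        pi = step(pi, symbol)
    return pi
```

Starting from `{"idle": 1.0, "busy": 0.0, "failed": 0.0}`, the input sequence `["job", "job"]` ends with busy fully possible (1.0) and failure possible to degree 0.5. Because only min and max are used, the degrees are never summed or renormalized.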

One of the great values of a systems approach to data science is its ability to flexibly apply a diverse collection of methods across a range of applications to aid analysts and decision makers. In that context, I have worked to bring generalized uncertainty quantification and information methods, including Dempster-Shafer evidence theory and possibilistic systems theory, to applications in engineering modeling and reliability analysis, decision support for critical infrastructure, and model-based diagnostics.
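Dempster's rule of combination is the standard way evidence theory fuses independent sources, which is central to its use in diagnostics and decision support. The sketch below is a minimal textbook version using exact fractions and hypothetical fault-diagnosis masses. It is not code from any of the applications described above. Note that Dempster's rule is known to behave counterintuitively when the sources are in high conflict.

```python
from fractions import Fraction

# Hypothetical diagnosis example: which component of a small system failed?
FRAME = frozenset({"pump", "valve", "sensor"})
M1 = {frozenset({"pump"}): Fraction(3, 5), FRAME: Fraction(2, 5)}
M2 = {frozenset({"valve"}): Fraction(1, 2), frozenset({"pump", "valve"}): Fraction(1, 2)}


def combine(m1, m2):
    """Dempster's rule of combination for two mass functions on the same frame.

    Products of masses go to the intersection of the focal sets; products
    landing on the empty set form the conflict K, and the result is
    renormalized by 1 - K. Returns (combined masses, K)."""
    joint, conflict = {}, Fraction(0)
    for a, x in m1.items():
        for b, y in m2.items():
            c = a & b
            if c:
                joint[c] = joint.get(c, Fraction(0)) + x * y
            else:
                conflict += x * y
    if conflict == 1:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {c: v / (1 - conflict) for c, v in joint.items()}, conflict
```

With M1 and M2 as given, the conflict is K = 3/10 and the combined masses are 3/7 on {pump}, 2/7 on {valve}, and 2/7 on {pump, valve}.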

Research Works

Computational Topology and Hypergraph Analytics

- Praggastis, Brenda; Aksoy, Sinan; Arendt, Dustin; Bonicillo, M; Joslyn, Cliff; Purvine, Emilie; Shapiro, Madelyn; Yun, Ji-Young: (2023) "HyperNetX: A Python Package for Modeling Complex Network Data as Hypergraphs", J Open Source Software, https://arxiv.org/abs/2310.11626, (in review)

- Rawson, Michael G; Myers, Audun; Green, Robert; Robinson, M; Joslyn, Cliff: (2023) "Formal Concept Lattice Representations and Algorithms for Hypergraphs", https://arxiv.org/abs/2307.11681

- Colby, Sean; Shapiro, Madelyn; Bilbao, Aivett; Broeckling, C; Lin, Andy; Purvine, Emilie; Joslyn, Cliff A: (2023) "Introducing Molecular Hypernetworks for Discovery in Multidimensional Metabolomics Data", J Proteome Research, https://www.biorxiv.org/content/10.1101/2023.09.29.560191v1

- Jenne, Helen; Aksoy, Sinan G; Best, Daniel; Bittner, A; Henselman-Petrusek, Gregory; Joslyn, Cliff; Myers, Audun; Seppala, Garret; Warley, Jackson; Young, Stephen J; Purvine, Emilie: (2023) "Stepping Out of Flatland: Discovering Behavior Patterns as Topological Structures in Cyber Hypergraphs", The Next Wave, (to appear)

- Myers, Audun; Bittner, Alyson S; Aksoy, Sinan G; Roek, G; Jenne, Helen; Joslyn, Cliff; Kay, Bill; Seppala, Garret; Young, Stephen; Purvine, Emilie AH: (2023) "Malicious Cyber Activity Detection Using Zigzag Persistence", in: IEEE Dependable and Secure Computing Wshop on AI/ML for Cybersecurity (AIML 23), https://arxiv.org/abs/2309.08010

- Joslyn, Cliff A; Ortiz-Munoz, Andres; Edgar, DRV; Kosheleva, O; Kreinovich, Vladik: (2023) "Causality: Hypergraphs, Matter of Degree, Foundations of Cosmology", in: Proc. 2023 Annual Conf. North American Fuzzy Information Processing Society (NAFIPS 2023), https://scholarworks.utep.edu/cs_techrep/1790/

- Zhou, Youjia; Jenne, Helen; Brown, Davis; Shapiro, M; Jefferson, Brett; Joslyn, Cliff; Henselman-Petrusek, Gregory; Praggastis, Brenda; Purvine, Emilie; Wang, Bei: (2023) "Comparing Mapper Graphs of Artificial Neuron Activations", in: IEEE Wshop. on Topological Data Analysis and Visualization (TopoInVis) at IEEE VIS, http://www.sci.utah.edu/~beiwang/publications/Compare_Activations_BeiWang_2023.pdf

- Purvine, Emilie AH; Brown, Davis; Jefferson, Brett; Joslyn, CA; Praggastis, Brenda; Rathore, Archit; Shapiro, Madelyn; Wang, Bei; Zhou, Youjia: (2023) "Experimental Observations of the Topology of Convolutional Neural Network Activations", in: Proc. AAAI Conf. on Artificial Intelligence (AAAI 23), v. 37:8, pp. 9470-9479, https://doi.org/10.1609/aaai.v37i8.2613

- Chrisman, Brianna S; Varma, Maya; Maleki, Sephideh; Brbic, M; Joslyn, CA; Zitnik, Marinka: (2023) "Graph Representations and Algorithms in Biomedicine", in: Pacific Symposium on Biocomputing 2023, ed. RB Altman, L Hunter et al., pp. 55-60, World Scientific, Singapore, https://doi.org/10.1142/9789811270611_0006

- Myers, Audun; Joslyn, Cliff A; Kay, Bill; Purvine, EAH; Roek, Gregory; Shapiro, Madelyn: (2023) "Topological Analysis of Temporal Hypergraphs", in: Proc. Wshop. on Algorithms and Models for the Web Graph (WAW 2023), Lecture Notes in Computer Science, v. 13894, pp. 127-146, https://link.springer.com/chapter/10.1007/978-3-031-32296-9_9, [p/reprint]; and SIAM MODS 2022, https://meetings.siam.org/sess/dsp_talk.cfm?p=123261

- Liu, Xu T; Firoz, Jesun; Lumsdaine, Andrew; Joslyn, CA; Aksoy, Sinan; Amburg, Ilya; Praggastis, Brenda; Gebremedhin, Assefaw: (2022) "High-Order Line Graphs of Non-Uniform Hypergraphs: Algorithms, Applications, and Experimental Analysis", 36th IEEE Int. Parallel and Distributed Processing Symp. (IPDPS 22), https://ieeexplore.ieee.org/document/9820632, [p/reprint]

- Kay, WW; Aksoy, Sinan G; Baird, Molly; Best, DM; Jenne, Helen; Joslyn, CA; Potvin, CD; Roek, Greg; Seppala, Garrett; Young, Stephen; Purvine, Emilie: (2022) "Hypergraph Topological Features for Autoencoder-Based Intrusion Detection for Cybersecurity Data", ML4Cyber Wshop., Int. Conf. Machine Learning 2022, https://icml.cc/Conferences/2022/ScheduleMultitrack?event=13458#collapse20252, [p/reprint]

- Aksoy, Sinan G; Hagberg, Aric; Joslyn, Cliff A; Kay, Bill; Purvine, Emilie; Young, Stephen J: (2022) "Models and Methods for Sparse (Hyper)Network Science in Business, Industry, and Government", Notices of the AMS, v. 69:2, pp. 287-291, https://doi.org/10.1090/noti2424

- Joslyn, Cliff A: (2021) "Finite Topologies and Hypergraphs: Essential Tools for Network Science", 2021 Joint Math Meetings, https://meetings.ams.org/data/handout/math/jmm2021/Paper_3484_handout_724_0.pdf

- Kvinge, Henry; Jefferson, Brett A; Joslyn, Cliff A; Purvine, EAH: (2021) "Sheaves as a Framework for Understanding and Interpreting Model Fit", Wshop. on Topology, Algebra, and Geometry in Computer Vision, ICCV 2021, https://openaccess.thecvf.com/content/ICCV2021W/TAG-CV/html/Kvinge_Sheaves_as_a_Framework_for_Understanding_and_Interpreting_Model_Fit_ICCVW_2021_paper.html

- Feng, Song; Heath, Emily; Jefferson, Brett; Joslyn, CA; Kvinge, Henry; McDermott, Jason E; Mitchell, Hugh D; Praggastis, Brenda; Eisfeld, Amie J; Sims, Amy C; Thackray, Larissa B; Fan, Shufang; Walters, Kevin B; Halfmann, Peter J; Westhoff-Smith, Danielle; Tan, Qing; Menachery, Vineet D; Sheahan, Timothy P; Cockrell, Adam S; Kocher, Jacob F; Stratton, Kelly G; Heller, Natalie C; Bramer, Lisa M; Diamond, Michael S; Baric, Ralph S; Waters, Katrina M; Kawaoka, Yoshihiro; Purvine, Emilie: (2021) "Hypergraph Models of Biological Networks to Identify Genes Critical to Pathogenic Viral Response", in: BMC Bioinformatics, v. 22:287, https://doi.org/10.1186/s12859-021-04197-2

- Joslyn, Cliff A; Aksoy, Sinan; Callahan, Tiffany J; Hunter, LE; Jefferson, Brett; Praggastis, Brenda; Purvine, Emilie AH; Tripodi, Ignacio J: (2021) "Hypernetwork Science: From Multidimensional Networks to Computational Topology", in: Unifying Themes in Complex Systems X: Proc. 10th Int. Conf. Complex Systems, ed. D. Braha et al., pp. 377-392, Springer, https://doi.org/10.1007/978-3-030-67318-5_25, [p/reprint]

- Liu, Xu T; Firoz, Jesun; Lumsdaine, Andrew; Joslyn, CA; Aksoy, Sinan; Praggastis, Brenda; Gebremedhin, Assefaw: (2021) "Parallel Algorithms for Efficient Computation of High-Order Line Graphs of Hypergraphs", in: 2021 IEEE 28th International Conference on High Performance Computing, Data, and Analytics (HiPC 2021), https://www.computer.org/csdl/proceedings-article/hipc/2021/101600a312/1Aqyi9gFKpy, [p/reprint]

- Aksoy, Sinan G; Joslyn, Cliff A; Marrero, Carlos O; Praggastis, B; Purvine, Emilie AH: (2020) "Hypernetwork Science via High-Order Hypergraph Walks", EPJ Data Science, v. 9:16, https://doi.org/10.1140/epjds/s13688-020-00231-0

- Firoz, Jesun; Jenkins, LP; Joslyn, CA; Praggastis, B; Purvine, Emilie; Raugas, Mark: (2020) "Computing Hypergraph Homology in Chapel", in: 2020 IEEE Int. Parallel and Distributed Processing Symp. Workshops (IPDPSW 20), pp. 667-670, https://doi.org/10.1109/IPDPSW50202.2020.00112, [p/reprint]

- Joslyn, Cliff A; Charles, Lauren; Deperno, Chris; Gould, N; Nowak, K; Praggastis, B; Purvine, EA; Robinson, M; Strules, J; Whitney, P: (2020) "A Sheaf Theoretical Approach to Uncertainty Quantification of Heterogeneous Geolocation Information", Sensors, v. 20:12, pp. 3418, https://doi.org/10.3390/s20123418

- Joslyn, Cliff A; Aksoy, Sinan; Arendt, Dustin; Firoz, J; Jenkins, Louis; Praggastis, Brenda; Purvine, Emilie AH; Zalewski, Marcin: (2020) "Hypergraph Analytics of Domain Name System Relationships", in: 17th Wshop. on Algorithms and Models for the Web Graph (WAW 2020), Lecture Notes in Computer Science, v. 12901, ed. Kaminski, B et al., pp. 1-15, Springer, https://doi.org/10.1007/978-3-030-48478-1_1, [p/reprint]

- Joslyn, Cliff A; Aksoy, Sinan; Arendt, Dustin; Jenkins, L; Praggastis, Brenda; Purvine, Emilie; Zalewski, Marcin: (2019) "High Performance Hypergraph Analytics of Domain Name System Relationships", in: Proc. HICSS Symp. on Cybersecurity Big Data Analytics, http://www.azsecure-hicss.org/

- Joslyn, Cliff A; Robinson, Michael; Smart, J; Agarwal, K; Bridgeland, David; Brown, Adam; Choudhury, Sutanay; Jefferson, Brett; Kraft, Norman; Praggastis, Brenda; Purvine, Emilie; Smith, William P; Zarzhitsky, Dimitri: (2019) "Hyperthesis: Topological Hypothesis Management in a Hypergraph Knowledgebase", in: Proc. 2018 Text Analytics Conference (TAC 2018), https://tac.nist.gov/publications/2018/papers.html

- Jenkins, Louis P; Bhuiyan, Tanver; Harun, Sarah; Lightsey, C; Aksoy, Sinan; Stavenger, Tim; Zalewski, Marcin; Medal, Hugh; Joslyn, Cliff: (2018) "Chapel Hypergraph Library (CHGL)", in: 2018 IEEE High Performance Extreme Computing Conf. (HPEC 2018), IEEE, Waltham, MA, https://doi.org/10.1109/HPEC.2018.8547520, [p/reprint]

- Purvine, Emilie A; Aksoy, Sinan; Joslyn, Cliff A; Nowak, K; Praggastis, Brenda; Robinson, Michael: (2018) "A Topological Approach to Representational Data Models", in: Human Interface and the Management of Information. Interaction, Visualization, and Analytics (HIMI 2018), Lecture Notes in Computer Science, v. 10904, ed. S Yamamoto; H Mori, pp. 90-109, Springer-Verlag, https://doi.org/10.1007/978-3-319-92043-6_8, [p/reprint]

- Robinson, Michael; Capraro, Chris; Joslyn, Cliff A; Purvine, EA; Praggastis, Brenda; Ranshous, Stephen; Sathanur, Arun: (2018) "Local Homology of Abstract Simplicial Complexes", https://arxiv.org/abs/1805.11547

- Joslyn, Cliff A; Nowak, Kathleen: (2017) "Ubergraphs: A Definition of a Recursive Hypergraph Structure", PNNL Technical Report PNNL-26402, https://arxiv.org/abs/1704.05547

- Ranshous, Stephen; Joslyn, Cliff A; Kreyling, Sean; Nowak, K; Samatova, Nagiza; West, Curtis; Winters, Samuel: (2017) "Exchange Pattern Mining in the Bitcoin Transaction Directed Hypergraph", in: Proc. 2017 Conf. Int. Financial Cryptography Association (Bitcoin 2017), Lecture Notes in Computer Science, v. 10323, ed. M Brenner et al., pp. 248-263, Springer-Verlag, https://doi.org/10.1007/978-3-319-70278-0_16, [p/reprint]

- Purvine, Emilie A; Joslyn, Cliff A; Robinson, Michael: (2017) "A Category Theoretical Investigation of the Type Hierarchy for Heterogeneous Sensor Integration", PNNL Technical Report PNNL-25784, https://arxiv.org/abs/1609.02883

- Joslyn, Cliff A; Praggastis, Brenda; Purvine, Emilie; Sathanur, A; Robinson, Michael; Ranshous, Stephen: (2016) "Local Homology Dimension as a Network Science Measure", in: SIAM Workshop on Network Science, Abstracts Book, pp. 86-87, https://www.siam.org/meetings/ns16/ns16_abstracts.pdf, [p/reprint]

- Robinson, Michael; Joslyn, Cliff A; Hogan, Emilie; Capraro, Chris: (2015) "Conglomeration of Heterogeneous Content Using Local Topology (CHCLT)", American University Technical Report No. 2015-1, https://doi.org/10.17606/v2x4-t004

- Joslyn, Cliff A; Hogan, Emilie; Robinson, Michael: (2014) "Towards a Topological Framework for Integrating Semantic Information Sources", in: 2014 Conf. on Semantic Technologies in Intelligence, Defense and Security (STIDS 2014), CEUR-WS, v. 1304, pp. 93-96, http://ceur-ws.org/Vol-1304/, [p/reprint]

- Joslyn, Cliff A: (2000) "Hypergraph-Based Representations for Portable Knowledge Management Environments: A White Paper", Los Alamos Technical Report LAUR 00-5660, [p/reprint]

Knowledge-Informed Machine Learning and Neurosymbolic Computing

- Zhou, Youjia; Jenne, Helen; Brown, Davis; Shapiro, M; Jefferson, Brett; Joslyn, Cliff; Henselman-Petrusek, Gregory; Praggastis, Brenda; Purvine, Emilie; Wang, Bei: (2023) "Comparing Mapper Graphs of Artificial Neuron Activations", in: IEEE Wshop. on Topological Data Analysis and Visualization (TopoInVis) at IEEE VIS, http://www.sci.utah.edu/~beiwang/publications/Compare_Activations_BeiWang_2023.pdf

- Myers, Audun; Bittner, Alyson S; Aksoy, Sinan G; Roek, G; Jenne, Helen; Joslyn, Cliff; Kay, Bill; Seppala, Garrett; Young, Stephen; Purvine, Emilie AH: (2023) "Malicious Cyber Activity Detection Using Zigzag Persistence", in: IEEE Dependable and Secure Computing Wshop. on AI/ML for Cybersecurity (AIML 23), https://arxiv.org/abs/2309.08010

- Purvine, Emilie AH; Brown, Davis; Jefferson, Brett; Joslyn, CA; Praggastis, Brenda; Rathore, Archit; Shapiro, Madelyn; Wang, Bei; Zhou, Youjia: (2023) "Experimental Observations of the Topology of Convolutional Neural Network Activations", in: Proc. AAAI Conf. on Artificial Intelligence (AAAI 23), v. 37:8, pp. 9470-9479, https://doi.org/10.1609/aaai.v37i8.2613

- Kay, WW; Aksoy, Sinan G; Baird, Molly; Best, DM; Jenne, Helen; Joslyn, CA; Potvin, CD; Roek, Greg; Seppala, Garrett; Young, Stephen; Purvine, Emilie: (2022) "Hypergraph Topological Features for Autoencoder-Based Intrusion Detection for Cybersecurity Data", ML4Cyber Wshop., Int. Conf. Machine Learning 2022, https://icml.cc/Conferences/2022/ScheduleMultitrack?event=13458#collapse20252, [p/reprint]

Applied Lattice Theory, Formal Concept Analysis, and Interval Computation

- Rawson, Michael G; Myers, Audun; Green, Robert; Robinson, M; Joslyn, Cliff: (2023) "Formal Concept Lattice Representations and Algorithms for Hypergraphs", https://arxiv.org/abs/2307.11681

- Myers, Audun; Joslyn, Cliff A; Kay, Bill; Purvine, EAH; Roek, Gregory; Shapiro, Madelyn: (2023) "Topological Analysis of Temporal Hypergraphs", in: Proc. Wshop. on Algorithms and Models for the Web Graph (WAW 2023), Lecture Notes in Computer Science, v. 13894, pp. 127-146, https://link.springer.com/chapter/10.1007/978-3-031-32296-9_9, [p/reprint]; and SIAM MODS 2022, https://meetings.siam.org/sess/dsp_talk.cfm?p=123261

- Joslyn, Cliff A; Pogel, Alex and Purvine, Emilie A: (2017) "Interval-Valued Rank in Finite Ordered Sets", Order, v. 34:3, pp. 491-512, https://doi.org/10.1007/s11083-016-9411-2

- Joslyn, Cliff A and Nowak, Kathleen: (2017) "Ubergraphs: A Definition of a Recursive Hypergraph Structure", PNNL Technical Report PNNL-26402, https://arxiv.org/abs/1704.05547

- Joslyn, Cliff A; Hogan, Emilie; Pogel, Alex: (2014) "Conjugacy and Iteration of Standard Interval Rank in Finite Ordered Sets", https://arxiv.org/abs/1409.6684

- Zapata, Francisco; Kreinovich, Vladik; Joslyn, Cliff A; and Hogan, Emilie: (2013) "Orders on Intervals Over Partially Ordered Sets: Extending Allen's Algebra and Interval Graph Results", Soft Computing, v. 17:8, pp. 1379-1391, https://doi.org/10.1007/s00500-013-1010-1, [p/reprint]

- Joslyn, Cliff A; Hogan, Emilie; Paulson, Patrick; Peterson, E; Stephan, Eric; Thomas, Dennis: (2013) "Order Theoretical Semantic Recommendation", in: 2nd Int. Wshop. on Ordering and Reasoning (OrdRing 2013), at ISWC 2013, CEUR-WS, v. 1059, pp. 9-20, http://ceur-ws.org/Vol-1059/, [p/reprint]

- Joslyn, Cliff A and Hogan, Emilie: (2011) "Intervals, Orders, and Rank", in: Uncertainty modeling and analysis with intervals: Foundations, tools, applications (Dagstuhl Seminar 11371), v. 1:9, ed. Elishakoff IE et al., pp. 36-37, Leibniz Informatik, Dagstuhl, DE, http://drops.dagstuhl.de/opus/volltexte/2011/3318, [p/reprint]

- Joslyn, Cliff A and Hogan, Emilie: (2010) "Order Metrics for Semantic Knowledge Systems", in: 5th Int. Conf. on Hybrid Artificial Intelligence Systems (HAIS 2010), Lecture Notes in Artificial Intelligence, v. 6077, ed. ES Corchado Rodriguez et al., pp. 399-409, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-642-13803-4_50, [p/reprint]

- Orum, Chris and Joslyn, Cliff A: (2009) "Valuations and Metrics on Partially Ordered Sets", http://arxiv.org/abs/0903.2679v1

- Joslyn, Cliff A and White, Amanda: (2009) "Taxonomy Package (TaxPac): An Experimental Mathematics Environment for Knowledge Systems Analysis", PNNL Technical Report PNWD-4084, [p/reprint]

- Joslyn, Cliff A: (2009) "Hierarchy Analysis of Knowledge Networks", in: IJCAI Int. Workshop on Graph Structures for Knowledge Representation and Reasoning, Pasadena, http://hal.archives-ouvertes.fr/docs/00/41/06/51/PDF/gkr-proceedings.PDF, [p/reprint]

- Kaiser, Tim; Schmidt, Stefan and Joslyn, Cliff: (2008) "Adjusting Annotated Taxonomies", in: Int. J. of Foundations of Computer Science, v. 19:2, pp. 345-358, https://doi.org/10.1142/S0129054108005711, [p/reprint]

- Kaiser, Tim; Schmidt, Stefan and Joslyn, Cliff: (2008) "Concept Lattice Representations of Annotated Taxonomies", in: Concept Lattices and their Applications, Lecture Notes in Computer Science, v. 4923, ed. SB Yahia et al., pp. 214-225, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-540-78921-5_14, [p/reprint]

- Joslyn, Cliff A; Gessler, DDG; Verspoor, KM: (2007) "Knowledge Integration in Open Worlds: Utilizing the Mathematics of Hierarchical Structure", in: Proc. IEEE Int. Conf. Semantic Computing (ICSC 07), pp. 105-112, IEEE, https://doi.org/10.1109/ICSC.2007.82, [p/reprint]

- Ceberio, Martine; Ferson, S; Joslyn, CA; Kreinovich, V; Xiang, Gang: (2006) "Adding Constraints to Situations When, In Addition to Intervals, We Also Have Partial Information About Probabilities", in: Proc. 12th GAMM - IMACS Int. Symp. on Scientific Computing, Computer Arithmetic and Validated Numerics (SCAN 2006), IEEE, Duisburg, DE, https://doi.org/10.1109/SCAN.2006.7, [p/reprint]

- Joslyn, Cliff A and Kreinovich, Vladik: (2005) "Convergence Properties of an Interval Probabilistic Approach to System Reliability Estimation", Int. J. General Systems, v. 34:4, pp. 465-482, https://doi.org/10.1080/03081070500033880, [p/reprint]

- Joslyn, Cliff A and Bruno, William J: (2005) "Weighted Pseudo-Distances for Categorization in Semantic Hierarchies", in: Conceptual Structures: Common Semantics for Sharing Knowledge, Lecture Notes in Artificial Intelligence, v. 3596, ed. F Dau, M-L Mugnier, G Stumme, pp. 381-395, https://doi.org/10.1007/11524564_26, [p/reprint]

- Joslyn, Cliff A; Oliveira, Joseph; Scherrer, Chad: (2004) "Order Theoretical Knowledge Discovery: A White Paper", Los Alamos Technical Report LAUR 04-5812, [p/reprint]

- Joslyn, Cliff A; Mniszewski, Susan; Fulmer, Andy; and Heaton, G: (2004) "The Gene Ontology Categorizer", Bioinformatics, v. 20:s1, pp. 169-177, https://doi.org/10.1093/bioinformatics/bth921

- Joslyn, Cliff A and Mniszewski, Susan: (2004) "Combinatorial Approaches to Bio-Ontology Management with Large Partially Ordered Sets", in: Proc. SIAM Workshop on Combinatorial Scientific Computing (CSC 04), San Francisco, https://www.tau.ac.il/~stoledo/csc04/Joslyn.pdf

- Joslyn, Cliff A and Ferson, Scott: (2004) "Approximate Representations of Random Intervals for Hybrid Uncertainty Quantification", in: Proc. 4th Int. Conf. on Sensitivity Analysis of Model Output (SAMO 2004), ed. KM Hanson, FM Hemez, pp. 453-469, Los Alamos National Laboratory, Los Alamos, NM, http://library.lanl.gov/cgi-bin/getdoc?event=SAMO2004&document=samo04-83.pdf, [p/reprint]

- Joslyn, Cliff A: (2004) "Poset Ontologies and Concept Lattices as Semantic Hierarchies", in: Conceptual Structures at Work, Lecture Notes in Artificial Intelligence, v. 3127, ed. Wolff, Pfeiffer and Delugach, pp. 287-302, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-540-27769-9_19, [p/reprint]

- Joslyn, Cliff A: (2003) "Multi-Interval Elicitation of Random Intervals for Engineering Reliability Analysis", in: Fourth Int. Symp. on Uncertainty Modeling and Analysis (ISUMA 2003), IEEE, College Park MD, https://doi.org/10.1109/ISUMA.2003.1236158, [p/reprint]

- Voss, Susan and Joslyn, Cliff: (2002) "Advanced Knowledge Integration in Assessing Terrorist Threats", Los Alamos Technical Report LAUR 02-7867, [p/reprint]

- Joslyn, Cliff A and Mniszewski, Susan: (2002) "Relational Analytical Tools: DataDelver and Formal Concept Analysis", Los Alamos Technical Report LAUR 02-7697, [p/reprint]

- Joslyn, Cliff A: (2001) "Measures of Distortion in Possibilistic Approximations of Consistent Random Sets and Intervals", in: Proc. 2001 Joint International Fuzzy Systems Association World Congress and North American Fuzzy Information Processing Society Int. Conf., pp. 1735-1740, https://doi.org/10.1109/NAFIPS.2001.943814, [p/reprint]

- Joslyn, Cliff A: (1997) "Distributional Representations of Random Interval Measurements", in: Uncertainty Analysis in Engineering and Sciences: Fuzzy Logic, Statistics, and Neural Network Approach, ed. Bilal Ayyub and Madan Gupta, pp. 37-52, Springer, Boston, https://doi.org/10.1007/978-1-4615-5473-8_3, [p/reprint]

- Joslyn, Cliff A: (1997) "Possibilistic Normalization of Inconsistent Random Intervals", Advances in Systems Science and Applications, v. special, ed. Wansheng Tang, pp. 44-51, [p/reprint]

- Joslyn, Cliff A: (1991) "Hierarchy and Strict Hierarchy in General Information Theory", in: Proc. 1991 Conf. of the Int. Society for the Systems Sciences (ISSS 91), v. 1, pp. 123-132, Östersund, SE, (Winner, 1991 Vickers Memorial Award), [p/reprint]

Generalized Information Theory, Uncertainty Quantification, and Possibilistic Information Theory

- Reyes, Hector A; Joslyn, Cliff and Kreinovich, Vladik: (2023) "Graph Approach to Uncertainty Quantification", in: Deep Learning and Other Soft Computing Techniques, Studies in Computational Intelligence, v. 1097, pp. 253-283, Springer, https://doi.org/10.1007/978-3-031-29447-1_23

- Joslyn, Cliff A; Ortiz-Munoz, Andres; Edgar, DRV; Kosheleva, O; Kreinovich, Vladik: (2023) "Causality: Hypergraphs, Matter of Degree, Foundations of Cosmology", in: Proc. 2023 Annual Conf. North American Fuzzy Information Processing Society (NAFIPS 2023), https://scholarworks.utep.edu/cs_techrep/1790/

- Joslyn, Cliff A and Weaver, Jesse: (2013) "Focused Belief Measures for Uncertainty Quantification in High Performance Semantic Analysis", in: 2013 Conf. on Semantic Technologies in Intelligence, Defense and Security (STIDS 2013), CEUR-WS, v. 1097, pp. 18-24, http://ceur-ws.org/Vol-1097, [p/reprint]

- Ceberio, Martine; Ferson, S; Joslyn, CA; Kreinovich, V; Xiang, Gang: (2006) "Adding Constraints to Situations When, In Addition to Intervals, We Also Have Partial Information About Probabilities", in: Proc. 12th GAMM - IMACS Int. Symp. on Scientific Computing, Computer Arithmetic and Validated Numerics (SCAN 2006), IEEE, Duisburg, DE, https://doi.org/10.1109/SCAN.2006.7, [p/reprint]

- Joslyn, Cliff A and Kreinovich, Vladik: (2005) "Convergence Properties of an Interval Probabilistic Approach to System Reliability Estimation", Int. J. General Systems, v. 34:4, pp. 465-482, https://doi.org/10.1080/03081070500033880, [p/reprint]

- Joslyn, Cliff A and Booker, Jane: (2005) "Generalized Information Theory for Engineering Modeling and Simulation", in: Engineering Design Reliability Handbook, ed. E Nikolaidis et al., pp. 9:1-40, CRC Press, https://www.taylorfrancis.com/books/e/9780429204616/chapters/10.1201/9780203483930-14, [p/reprint]

- Ross, Timothy; Booker, Jane M; Hemez, Francois M; Anderson, MC; Reardon, Brian J; Joslyn, Cliff: (2004) "Quantifying Total Uncertainty Using Different Mathematical Theories in a Validation Assessment", in: Proc. 9th ASCE Joint Specialty Conf. on Probabilistic Mechanics and Structural Reliability, pp. 99, Albuquerque, [p/reprint]

- Oberkampf, WL; Helton, JC; Joslyn, CA; Wojtkiewicz, SF; Ferson, Scott: (2004) "Challenge Problems: Uncertainty in System Response Given Uncertain Parameters", Reliability Engineering and System Safety, v. 85:1-3, pp. 11-20, https://doi.org/10.1016/j.ress.2004.03.002, [p/reprint]

- Joslyn, Cliff A and Ferson, Scott: (2004) "Approximate Representations of Random Intervals for Hybrid Uncertainty Quantification", in: Proc. 4th Int. Conf. on Sensitivity Analysis of Model Output (SAMO 2004), ed. KM Hanson, FM Hemez, pp. 453-469, Los Alamos National Laboratory, Los Alamos, NM, http://library.lanl.gov/cgi-bin/getdoc?event=SAMO2004&document=samo04-83.pdf, [p/reprint]

- Joslyn, Cliff A; Buehring, W; Kaplan, PG; and Powell, D: (2004) "Critical Infrastructure Protection Decision Support System (CIP/DSS): Addressing Uncertainty and Risk", Los Alamos National Laboratory Technical Report LAUR 04-6720, [p/reprint]

- Joslyn, Cliff A: (2004) "GIT Analysis of the Crushable Foam Experiment and Simulations", Los Alamos Technical Report LAUR 04-6207, [p/reprint]

- Ferson, Scott; Joslyn, CA; Helton, JC; Oberkampf, WL; Sentz, K: (2004) "Summary of the Epistemic Uncertainty Workshop: Consensus Amid Diversity", Reliability Engineering and System Safety, v. 85:1-3, pp. 355-369, https://doi.org/10.1016/j.ress.2004.03.023, [p/reprint]

- Booker, JM; Ross, TM; Rutherford, Amanda C; Reardon, BJ; Hemez, Francois; Anderson, Mark C; Doebling, Scott W; Joslyn, Cliff A: (2004) "An Engineering Perspective on UQ for Validation, Reliability, and Certification", in: Proc. Foundations ’04 Workshop for Verification, Validation, and Accreditation (VV&A) in the 21st Century, [p/reprint]

- Joslyn, Cliff A: (2003) "Multi-Interval Elicitation of Random Intervals for Engineering Reliability Analysis", in: Fourth Int. Symp. on Uncertainty Modeling and Analysis (ISUMA 2003), IEEE, College Park MD, https://doi.org/10.1109/ISUMA.2003.1236158, [p/reprint]

- Oberkampf, WL; Helton, JC; Wojtkiewicz, Steve; Joslyn, CA; Ferson, Scott: (2002) "Epistemic Uncertainty Workshop", in: Reliable Computing, v. 8:6, pp. 503-505, https://doi.org/10.1023/A:1021372829139, [p/reprint]

- Joslyn, Cliff A and Helton, Jon C: (2002) "Bounds on Plausibility and Belief of Functionally Propagated Random Sets", in: Proc. 2002 Annual Meeting of the North American Fuzzy Information Processing Society (NAFIPS-FLINT 2002), pp. 412-417, https://doi.org/10.1109/NAFIPS.2002.1018095, [p/reprint]

- Ross, Timothy; Kreinovich, Vladik; Joslyn, Cliff: (2001) "Assessing the Predictive Accuracy of Complex Simulation Models", in: Proc. 2001 Joint International Fuzzy Systems Association World Congress and North American Fuzzy Information Processing Society Int. Conf., pp. 2008-2012, https://doi.org/10.1109/NAFIPS.2001.944376, [p/reprint]

- Joslyn, Cliff A: (2001) "Measures of Distortion in Possibilistic Approximations of Consistent Random Sets and Intervals", in: Proc. 2001 Joint International Fuzzy Systems Association World Congress and North American Fuzzy Information Processing Society Int. Conf., pp. 1735-1740, https://doi.org/10.1109/NAFIPS.2001.943814, [p/reprint]

- Joslyn, Cliff A: (1999) "Possibilistic Systems Theory Within a General Information Theory", in: 1999 Workshop on Imprecise Probabilities and their Applications, ed. G. de Cooman et al., pp. 206-215, ftp://decsai.ugr.es/pub/utai/other/smc/isipta99/064.pdf

- Joslyn, Cliff A and Rocha, Luis: (1998) "Towards a Formal Taxonomy of Hybrid Uncertainty Representations", Information Sciences, v. 110:3-4, pp. 255-277, https://doi.org/10.1016/S0020-0255(98)00011-5, [p/reprint]

- Joslyn, Cliff A: (1997) "Measurement of Possibilistic Histograms from Interval Data", Int. J. General Systems, v. 26:1-2, pp. 9-33, https://doi.org/10.1080/03081079708945167, [p/reprint]

- Joslyn, Cliff A: (1997) "Towards General Information Theoretical Representations of Database Problems", in: Proc. 1997 Conf. of the IEEE Society for Systems, Man and Cybernetics: Computational Cybernetics and Simulation, v. 2, pp. 1662-1667, Orlando, https://doi.org/10.1109/ICSMC.1997.638247, [p/reprint]

- Joslyn, Cliff A: (1997) "Distributional Representations of Random Interval Measurements", in: Uncertainty Analysis in Engineering and Sciences: Fuzzy Logic, Statistics, and Neural Network Approach, ed. Bilal Ayyub and Madan Gupta, pp. 37-52, Springer, Boston, https://doi.org/10.1007/978-1-4615-5473-8_3, [p/reprint]

- Joslyn, Cliff A: (1997) "Possibilistic Normalization of Inconsistent Random Intervals", Advances in Systems Science and Applications, v. special, ed. Wansheng Tang, pp. 44-51, [p/reprint]

- Joslyn, Cliff A and Henderson, Scott: (1996) "CAST Extensions to DASME to Support Generalized Information Theory", in: Computer Aided Systems Theory: EUROCAST '95, Lecture Notes in Computer Science, v. 1030, ed. Franz Pichler, pp. 237-252, Springer-Verlag, Berlin, https://doi.org/10.1007/BFb0034764, [p/reprint]

- Joslyn, Cliff A: (1996) "Hybrid Methods to Represent Incomplete and Uncertain Information", in: Proc. 1996 Interdisciplinary Conf. on Intelligent Systems: A Semiotic Perspective, ed. J. Albus, A. Meystel et al., pp. 133-140, NIST, Gaithersburg MD, [p/reprint]

- Joslyn, Cliff A: (1996) "Aggregation and Completion of Random Sets with Distributional Fuzzy Measures", Int. J. of Uncertainty, Fuzziness, and Knowledge-Based Systems, v. 4:4, pp. 307-329, https://doi.org/10.1142/S0218488596000184, [p/reprint]

- Joslyn, Cliff A: (1996) "An Object-Oriented Architecture for Possibilistic Models", in: Computer-Aided Systems Technology, Lecture Notes in Computer Science, v. 1105, ed. T. Ören and G. Klir, pp. 80-94, Springer-Verlag, Berlin, https://doi.org/10.1007/3-540-61478-8_69, [p/reprint]

- Joslyn, Cliff A: (1995) "Strong Probabilistic Compatibility of Possibilistic Histograms", in: Proc. 1995 Joint Int. Symposium on Uncertainty Modeling and Analysis and Conf. of the North American Fuzzy Information Processing Society (ISUMA/NAFIPS 95), ed. Bilal Ayyub, pp. A17-22, IEEE Comp. Soc. Press, Los Alamitos CA, [p/reprint]

- Joslyn, Cliff A: (1995) "In Support of an Independent Possibility Theory", in: Foundations and Applications of Possibility Theory, ed. G de Cooman et al., pp. 152-164, World Scientific, Singapore, https://doi.org/10.1142/2775, [p/reprint]

- Joslyn, Cliff A: (1994) "Aggregation and Completion in Probability and Possibility Theory", in: Proc. 1994 Joint Conf. on Information Sciences, ed. PP Wang, pp. 333-336, Pinehurst NC, (extended abstract), [p/reprint]

- Joslyn, Cliff A: (1994) "A Possibilistic Approach to Qualitative Model-Based Diagnosis", Telematics and Informatics, v. 11:4, pp. 365-384, https://doi.org/10.1016/0736-5853(94)90026-4, [p/reprint]

- Joslyn, Cliff A: (1994) "On Possibilistic Automata", in: Computer Aided Systems Theory: EUROCAST '93, Lecture Notes in Computer Science, v. 763, ed. F. Pichler and R. Moreno-Díaz, pp. 231-242, Springer-Verlag, Berlin, https://doi.org/10.1007/3-540-57601-0_53, [p/reprint]

- Joslyn, Cliff A: (1994) Possibilistic Processes for Complex Systems Modeling, State University of New York, Binghamton NY, (PhD dissertation), [p/reprint]

- Joslyn, Cliff A: (1994) "Qualitative Model-Based Diagnosis Using Possibility Theory", in: Proc. 1994 Goddard Conf. on Space Applications of Artificial Intelligence, pp. 269-283, http://hdl.handle.net/2060/19940030559

- Joslyn, Cliff A: (1993) "Empirical Possibility and Minimal Information Distortion", in: Fuzzy Logic: State of the Art, ed. R Lowen and M Roubens, pp. 143-152, Kluwer, Dordrecht, https://doi.org/10.1007/978-94-011-2014-2_14, [p/reprint]

- Joslyn, Cliff A: (1993) "Some New Results on Possibilistic Measurement", in: Proc. 1993 Conf. of the North American Fuzzy Information Processing Society (NAFIPS 1993), pp. 227-231, Allentown PA, [p/reprint]

- Joslyn, Cliff A: (1993) "Possibilistic Semantics and Measurement Methods in Complex Systems", in: Proc. 2nd Int. Symposium on Uncertainty Modeling and Analysis (ISUMA 1993), ed. Bilal Ayyub, pp. 208-215, IEEE Computer Soc., https://doi.org/10.1109/ISUMA.1993.366766, [p/reprint]

- Horstkotte, Erik; Joslyn, Cliff A; Kantrowitz, Mark: (1993) Answers to Frequently Asked Questions on comp.ai.fuzzy, https://www.cs.cmu.edu/Groups/AI/html/faqs/ai/fuzzy/part1/faq.html

- Joslyn, Cliff A and Klir, George: (1992) "Minimal Information Loss Possibilistic Approximations of Random Sets", in: Proc. 1992 IEEE Int. Conf. on Fuzzy Systems (FUZZ-IEEE 92), ed. Jim Bezdek, pp. 1081-1088, IEEE, San Diego, https://doi.org/10.1109/FUZZY.1992.258698, [p/reprint]

- Joslyn, Cliff A: (1992) "Possibilistic Measurement and Set Statistics", in: Proc. 1992 Conf. of the North American Fuzzy Information Processing Society (NAFIPS 1992), v. 2, pp. 458-467, Puerto Vallarta, [p/reprint]

- Joslyn, Cliff A: (1991) "Hierarchy and Strict Hierarchy in General Information Theory", in: Proc. 1991 Conf. of the Int. Society for the Systems Sciences (ISSS 91), v. 1, pp. 123-132, Östersund, SE, (Winner, 1991 Vickers Memorial Award), [p/reprint]

- Joslyn, Cliff A: (1991) "Towards an Empirical Semantics of Possibility Through Maximum Uncertainty", in: Proc. 4th World Congress of the Int. Fuzzy Systems Association: Artificial Intelligence (IFSA 91), v. A, pp. 86-89, Brussels, (extended abstract), [p/reprint]

Semantic Technology, Ontology Metrics, and Knowledge Representation

- Chrisman, Brianna S; Varma, Maya; Maleki, Sephideh; Brbic, M; Joslyn, CA; Zitnik, Marinka: (2023) "Graph Representations and Algorithms in Biomedicine", in: Pacific Symposium on Biocomputing 2023, ed. RB Altman, L Hunter et al., pp. 55-60, World Scientific, Singapore, https://doi.org/10.1142/9789811270611_0006

- Joslyn, Cliff A; Robinson, Michael; Smart, J; Agarwal, K; Bridgeland, David; Brown, Adam; Choudhury, Sutanay; Jefferson, Brett; Kraft, Norman; Praggastis, Brenda; Purvine, Emilie; Smith, William P; Zarzhitsky, Dimitri: (2019) "Hyperthesis: Topological Hypothesis Management in a Hypergraph Knowledgebase", in: Proc. 2018 Text Analysis Conference (TAC 2018), https://tac.nist.gov/publications/2018/papers.html

- Sathanur, Arun; Choudhury, Sutanay; Joslyn, Cliff A; and Purohit, Sumit: (2017) "When Labels Fall Short: Property Graph Simulation via Blending of Network Structure and Vertex Attributes", in: Proc. ACM Int. Conf. on Information and Knowledge Management (CIKM 2017), pp. 2287-2290, ACM, Singapore, https://doi.org/10.1145/3132847.3133065, [p/reprint]

- Joslyn, Cliff A and Weaver, Jesse: (2013) "Focused Belief Measures for Uncertainty Quantification in High Performance Semantic Analysis", in: 2013 Conf. on Semantic Technologies in Intelligence, Defense and Security (STIDS 2013), CEUR-WS, v. 1097, pp. 18-24, http://ceur-ws.org/Vol-1097, [p/reprint]

- Joslyn, Cliff A; Hogan, Emilie; Paulson, Patrick; Peterson, E; Stephan, Eric; Thomas, Dennis: (2013) "Order Theoretical Semantic Recommendation", in: 2nd Int. Wshop. on Ordering and Reasoning (OrdRing 2013), at ISWC 2013, CEUR-WS, v. 1059, pp. 9-20, http://ceur-ws.org/Vol-1059/, [p/reprint]

- Gessler, DDG; Joslyn, CA and Verspoor, KM: (2013) "A Posteriori Ontology Engineering for Data-Driven Science", in: Data Intensive Science, ed. K Kleese-van Dam, T Critchlow, pp. 215-244, CRC Press, [p/reprint]

- Baker, Nathan; Barr, Jonathan L; Bonheyo, George; Joslyn, CA; Krishnaswami, Kannan; Oxley, Mark and Quadrel, Rich; Sego, Landon; Tardiff, Mark; Wynne, Adam: (2013) "Research Towards a Systematic Signature Discovery Process", in: 2013 IEEE Int. Conf. on Intelligence and Security Informatics (ISI 2013), pp. 301-308, IEEE, https://doi.org/10.1109/ISI.2013.6578848, [p/reprint]

- Zhang, Guo-Qiang; Luo, Lingyun; Ogbuji, Chime; Joslyn, CA; Mejino, Jose; Sahoo, Satya: (2012) "An Analysis of Multi-type Relational Interactions in FMA Using Graph Motifs", in: AMIA 2012 Symp. Proc., pp. 1060-1069, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3540524/

- Burk, Robin; Chappell, Alan; Gregory, Michelle; Joslyn, CA; McGrath, Liam: (2012) "Pattern Discovery Using Semantic Network Analysis", in: 3rd Int. Wshop. Cognitive Information Processing (CIP 2012), IEEE, https://doi.org/10.1109/CIP.2012.6232917, [p/reprint]

- Joslyn, Cliff A; Haglin, David J; Hogan, Emilie; Heredia-Langner, A; Paulson, Patrick P; White, Amanda: (2011) "Measuring Semantic Dispersion in Zymurgy", PNNL Technical Report PNNL-24142, [p/reprint]

- Joslyn, Cliff A; Al-Saffar, Sinan; Purohit, Sumit: (2011) "A Typed Path Metric in Semantic Graphs", PNNL Technical Report PNWD-4299, [p/reprint]

- Joslyn, Cliff A; Al-Saffar, Sinan; Haglin, David; and Holder, Lawrence: (2011) "Combinatorial Information Theoretical Measurement of the Semantic Significance of Semantic Graph Motifs", in: Mining Data Semantics Workshop (MDS 2011), SIGKDD 2011, [p/reprint]

- Burk, Robin; Chappell, Alan; Davis, Mark; Gregory, M; Joslyn, Cliff; McGrath, Liam; Morara, Michele; Rust, Steve: (2011) "Semantic Network Analysis for Evidence Evaluation: The Threat Anticipation Platform", in: 2011 IEEE Network Science Workshop, pp. 162-166, IEEE, https://doi.org/10.1109/NSW.2011.6004641, [p/reprint]

- Al-Saffar, Sinan; Joslyn, Cliff A; Chappell, Alan: (2011) "Structure Discovery in Large Semantic Graphs Using Extant Ontological Scaling and Descriptive Statistics", in: IEEE/WIC/ACM Int. Conf. on Web Intelligence and Intelligent Agent Technology (WI-IAT), v. 1, pp. 211-218, IEEE, Lyon, https://doi.org/10.1109/WI-IAT.2011.241, [p/reprint]

- Kleese van Dam, Kerstin; Joslyn, Cliff A; McCue, Lee Ann; Lansing, C; Guillen, Zoe; Corrigan, Abbie; Cannon, William; Anderson, Gordon; Romine, Margaret: (2010) "Final Evaluation Report for the Semantic Driven Knowledge Discovery; Integration in the System Biology Knowledgebase Projects", PNNL Technical Report PNNL-SA-75957, [p/reprint]

- Joslyn, Cliff A and Hogan, Emilie: (2010) "Order Metrics for Semantic Knowledge Systems", in: 5th Int. Conf. on Hybrid Artificial Intelligence Systems (HAIS 2010), Lecture Notes in Artificial Intelligence, v. 6077, ed. ES Corchado Rodriguez et al., pp. 399-409, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-642-13803-4_50, [p/reprint]

- Joslyn, Cliff A and White, Amanda: (2009) "Taxonomy Package (TaxPac): An Experimental Mathematics Environment for Knowledge Systems Analysis", PNNL Technical Report PNWD-4084, [p/reprint]

- Joslyn, Cliff A and Paulson, Patrick: (2009) "Hierarchical Analysis of the Omega Ontology", PNNL Technical Report PNNL-19041, [p/reprint]

- Joslyn, Cliff A; Paulson, Patrick; White, Amanda: (2009) "Measuring the Structural Preservation of Semantic Hierarchy Alignments", in: Proc. 4th Int. Wshop. on Ontology Matching (OM-2009), CEUR-WS, v. 551, http://ceur-ws.org/Vol-551/, [p/reprint]

- Joslyn, Cliff A; Baddeley, Bob; Blake, Judith; Bult, C; Dolan, M; Riensche, R; Rodland, K and Sanfilippo, A; White, A: (2009) "Automated Annotation-Based Bio-Ontology Alignment with Structural Validation", in: Proc. Int. Conf. on Biomedical Ontology (ICBO 09), Nature Precedings, ed. Barry Smith, pp. 75-78, https://doi.org/10.1038/npre.2009.3518.1

- Joslyn, Cliff A: (2009) "Hierarchy Analysis of Knowledge Networks", in: IJCAI Int. Workshop on Graph Structures for Knowledge Representation and Reasoning, Pasadena, http://hal.archives-ouvertes.fr/docs/00/41/06/51/PDF/gkr-proceedings.PDF, [p/reprint]

- Euzenat, Jérôme; Ferrara, Alfio and Hollink, P; Isaac, A; Joslyn, Cliff; Malaisé, Véronique; Meilicke, Christian; Nikolov, Andriy; Pane, Juan and Sabou, Marta; Scharffe, Francois; Shvaiko, Pavel; Spiliopoulos, Vassilis; Stuckenschmidt, Heiner; Šváb-Zamazal, Ondrej; Svátek, Vojtech; dos Santos, Cássia; Vouros, George; Wang, Shenghui: (2009) "Results of the Ontology Alignment Evaluation Initiative 2009", in: Proc. 4th Int. Wshop. on Ontology Matching (OM-2009), CEUR-WS, v. 551, http://ceur-ws.org/Vol-551/, [p/reprint]

- Chappell, Alan; Bladek, Anthony; Joslyn, CA; Marshall, E; McGrath, Liam; Paulson, Patrick; Stolberg, Sean; White, Amanda: (2009) "Supporting the Analytic Knowledge Manager: Formal Methods for Ontology Display and Management", in: 2009 Conf. on Ontologies for the Intelligence Community (OIC 2009), CEUR-WS, v. 555, Fairfax, VA, http://ceur-ws.org/Vol-555/, [p/reprint]

- Kaiser, Tim; Schmidt, Stefan and Joslyn, Cliff: (2008) "Concept Lattice Representations of Annotated Taxonomies", in: Concept Lattices and their Applications, Lecture Notes in Computer Science, v. 4923, ed. SB Yahia et al., pp. 214-225, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-540-78921-5_14, [p/reprint]

- Joslyn, Cliff A; Paulson, Patrick; Verspoor, KM: (2008) "Exploiting Term Relations for Semantic Hierarchy Construction", in: Proc. Int. Conf. Semantic Computing (ICSC 08), pp. 42-49, IEEE Computer Society, Los Alamitos CA, https://doi.org/10.1109/ICSC.2008.68, [p/reprint]

- Joslyn, Cliff A; Gregory, Michelle; McGrath, Liam; Paulson, P; Verspoor, KM: (2008) "Semantic Hierarchies: Induction, Measurement, and Management", in: 2008 NSF Symp. on Semantic Knowledge Discovery, Organization and Use, Courant Inst., NYU, New York, https://nlp.cs.nyu.edu/sk-symposium/note/P-21.pdf, (poster)

- Joslyn, Cliff A; Donaldson, Alex; Paulson, Patrick: (2008) "Evaluating the Structural Quality of Semantic Hierarchy Alignments", in: Proc. Poster and Demonstration Session at the 7th Int. Semantic Web Conference (ISWC 2008), CEUR-WS, v. 401, http://ceur-ws.org/Vol-401/, [p/reprint]

- Joslyn, Cliff A; Gessler, DDG; Verspoor, KM: (2007) "Knowledge Integration in Open Worlds: Utilizing the Mathematics of Hierarchical Structure", in: Proc. IEEE Int. Conf. Semantic Computing (ICSC 07), pp. 105-112, IEEE, https://doi.org/10.1109/ICSC.2007.82, [p/reprint]

- Verspoor, KM; Cohn, JD; Mniszewski, SM; and Joslyn, CA: (2006) "A Categorization Approach to Automated Ontological Function Annotation", Protein Science, v. 15:6, pp. 1544-1549, https://doi.org/10.1110/ps.062184006

- Joslyn, Cliff A; Mniszewski, SM; Smith, SA; and Weber, PM: (2006) "SpindleViz: A Three Dimensional, Order Theoretical Visualization Environment for the Gene Ontology", in: Joint BioLINK and 9th Bio-Ontologies Meeting (JBB 06), [p/reprint]

- Joslyn, Cliff A; Gessler, DDG; Schmidt, SE; and Verspoor, KM: (2006) "Distributed Representations of Bio-Ontologies for Semantic Web Services", in: Joint BioLINK and 9th Bio-Ontologies Meeting (JBB 06), [p/reprint]

- Gessler, DDG; Joslyn, CA; Verspoor, KM; and Schmidt, SE: (2006) "Deconstruction, Reconstruction, and Ontogenesis for Large, Monolithic, Legacy Ontologies in Semantic Web Service Applications", Los Alamos Technical Report 06-5859, [p/reprint]

- Joslyn, Cliff A; Verspoor, Karin and Rodriguez, Marko: (2006) "Representing the M and I Semantic Space: Concept Description for Lexical Management in Support of Ontology Development", Los Alamos Technical Report LAUR 06-1060, pp. 109-125

- Verspoor, KM; Joslyn, CA; Ambrosiano, JA; Bäcker, A; Bodenreider, O; Hirschman, L; Karp, P; Kelly, H; Loranger, S; Musen, M; Sriram, R; Wroe, C: (2005) "Knowledge Integration for Biothreat Response", Los Alamos Technical Report LAUR 05-0907, https://www.lhncbc.nlm.nih.gov/system/files/pub2005035.pdf

- Verspoor, KM; Cohn, J; Joslyn, CA; Mniszewski, SM; Rechtsteiner, Andreas; Rocha, Luis M; Simas, Tiago: (2005) "Protein Annotation as Term Categorization in the Gene Ontology Using Word Proximity Networks", in: BMC Bioinformatics, v. 6:s1, https://dx.doi.org/10.1186%2F1471-2105-6-S1-S20

- Verspoor, KM; Cohn, JD; Mniszewski, SM; and Joslyn, CA: (2005) "POSOLE: Automated Ontological Annotation for Function Prediction", in: Proc. Automated Function Prediction SIG, ISMB 05, https://www.biofunctionprediction.org/afp_programs/afp-2005-program.pdf, [p/reprint]

- Joslyn, Cliff A; Cohn, Judith; Verspoor, KM; and Mniszewski, SM: (2005) "Automating Ontological Function Annotation: Towards a Common Methodological Framework", in: Proc. Eighth Annual Bio-Ontologies Meeting, ISMB 2005, Detroit, [p/reprint]

- Joslyn, Cliff A and Bruno, William J: (2005) "Weighted Pseudo-Distances for Categorization in Semantic Hierarchies", in: Conceptual Structures: Common Semantics for Sharing Knowledge, Lecture Notes in Artificial Intelligence, v. 3596, ed. F Dau, M-L Mugnier, G Stumme, pp. 381-395, https://doi.org/10.1007/11524564_26, [p/reprint]

- Joslyn, Cliff A; Mniszewski, Susan; Fulmer, Andy; and Heaton, G: (2004) "The Gene Ontology Categorizer", Bioinformatics, v. 20:s1, pp. 169-177, https://doi.org/10.1093/bioinformatics/bth921

- Joslyn, Cliff A and Mniszewski, Susan: (2004) "Combinatorial Approaches to Bio-Ontology Management with Large Partially Ordered Sets", in: Proc. SIAM Workshop on Combinatorial Scientific Computing (CSC 04), San Francisco, https://www.tau.ac.il/~stoledo/csc04/Joslyn.pdf

- Joslyn, Cliff A: (2004) "Poset Ontologies and Concept Lattices as Semantic Hierarchies", in: Conceptual Structures at Work, Lecture Notes in Artificial Intelligence, v. 3127, ed. Wolff, Pfeiffer and Delugach, pp. 287-302, Springer-Verlag, Berlin, https://doi.org/10.1007/978-3-540-27769-9_19, [p/reprint]

- Verspoor, KM; Joslyn, Cliff and Papcun, George: (2003) "The Gene Ontology as a Source of Lexical Semantic Knowledge for a Biological Natural Language Processing Application", in: Workshop on Text Analysis and Search for Bioinformatics (SIGIR 2003), pp. 51-56, [p/reprint]

- Joslyn, Cliff A: (2000) "Hypergraph-Based Representations for Portable Knowledge Management Environments: A White Paper", Los Alamos Technical Report LAUR 00-5660, [p/reprint]

- Joslyn, Cliff A and Kantor, M: (1997) "Semantic Representations for Collaborative, Distributed Scientific Information Systems", Los Alamos Technical Report LAUR 97-2398, [p/reprint]

- Joslyn, Cliff A: (1996) "Semantic Webs: A Cyberspatial Representational Form for Cybernetics", in: Proc. 1996 European Conf. on Cybernetics and Systems Research, v. 2, ed. R. Trappl, pp. 905-910, https://www.tib.eu/en/search/id/BLCP%3ACN016275607/Semantic-Webs-A-Cyberspatial-representational-Form/, [p/reprint]

- Joslyn, Cliff A: (1995) "Semantic Control Systems", World Futures: The Journal of General Evolution, v. 45:1-4, pp. 87-123, https://doi.org/10.1080/02604027.1995.9972555, [p/reprint]

- Joslyn, Cliff A; Heylighen, Francis; Turchin, Valentin: (1993) "Synopsis of the Principia Cybernetica Project", in: Proc. 13th Int. Congress on Cybernetics, ed. J. Ramaekers, pp. 509-513, Int. Assoc. Cybernetics, Namur, [p/reprint]

- Heylighen, Francis; Joslyn, Cliff; Turchin, Valentin: (1991) "A Short Introduction to the Principia Cybernetica Project", J. Ideas, v. 2:1, pp. 26-29, ftp://ftp.vub.ac.be/pub/projects/Principia_Cybernetica/Texts_General/Short_Intro.txt

Computational and Theoretical Biology, Bio-Ontologies, and Ontological Protein Function Annotation

- Colby, Sean; Shapiro, Madelyn; Bilbao, Aivett; Broeckling, C; Lin, Andy; Purvine, Emilie; Joslyn, Cliff A: (2023) "Introducing Molecular Hypernetworks for Discovery in Multidimensional Metabolomics Data", J Proteome Research, https://www.biorxiv.org/content/10.1101/2023.09.29.560191v1

- Chrisman, Brianna S; Varma, Maya; Maleki, Sephideh; Brbic, M; Joslyn, CA; Zitnik, Marinka: (2023) "Graph Representations and Algorithms in Biomedicine", in: Pacific Symposium on Biocomputing 2023, ed. RB Altman, L Hunter et al., pp. 55-60, World Scientific, Singapore, https://doi.org/10.1142/9789811270611_0006

- Joslyn, Cliff A: (2021) "Semiotic and Physical Requirements on Emergent Autogenic Systems", Biosemiotics, v. 14, pp. 665-667, https://doi.org/10.1007/s12304-021-09469-1, [p/reprint]

- Feng, Song; Heath, Emily; Jefferson, Brett; Joslyn, CA; Kvinge, Henry; McDermott, Jason E; Mitchell, Hugh D; Praggastis, Brenda; Eisfeld, Amie J; Sims, Amy C; Thackray, Larissa B; Fan, Shufang; Walters, Kevin B; Halfmann, Peter J; Westhoff-Smith, Danielle; Tan, Qing; Menachery, Vineet D; Sheahan, Timothy P; Cockrell, Adam S; Kocher, Jacob F; Stratton, Kelly G; Heller, Natalie C; Bramer, Lisa M; Diamond, Michael S; Baric, Ralph S; Waters, Katrina M; Kawaoka, Yoshihiro; Purvine, Emilie: (2021) "Hypergraph Models of Biological Networks to Identify Genes Critical to Pathogenic Viral Response", in: BMC Bioinformatics, v. 22:287, https://doi.org/10.1186/s12859-021-04197-2

- Joslyn, Cliff A; Aksoy, Sinan; Callahan, Tiffany J; Hunter, LE; Jefferson, Brett; Praggastis, Brenda; Purvine, Emilie AH; Tripodi, Ignacio J: (2021) "Hypernetwork Science: From Multidimensional Networks to Computational Topology", in: Unifying Themes in Complex Systems X: Proc. 10th Int. Conf. Complex Systems, ed. D. Braha et al., pp. 377-392, Springer, https://doi.org/10.1007/978-3-030-67318-5_25, [p/reprint]

- Brier, Søren and Joslyn, CA: (2013) "Information in Biosemiotics: Introduction to the Special Issue", Biosemiotics, v. 6:1, pp. 1-7, https://doi.org/10.1007/s12304-012-9151-7, [p/reprint]

- Brier, Søren and Joslyn, CA: (2013) "What Does It Take To Produce Interpretation? Informational, Peircean, and Code-Semiotic Views on Biosemiotics", Biosemiotics, v. 6:1, pp. 143-159, https://doi.org/10.1007/s12304-012-9153-5, [p/reprint]

- Zhang, Guo-Qiang; Luo, Lingyun; Ogbuji, Chime; Joslyn, CA; Mejino, Jose; Sahoo, Satya: (2012) "An Analysis of Multi-type Relational Interactions in FMA Using Graph Motifs", in: AMIA 2012 Symp. Proc., pp. 1060-1069, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3540524/

- Kleese van Dam, Kerstin; Joslyn, Cliff A; McCue, Lee Ann; Lansing, C; Guillen, Zoe; Corrigan, Abbie; Cannon, William; Anderson, Gordon; Romine, Margaret: (2010) "Final Evaluation Report for the Semantic Driven Knowledge Discovery and Integration in the Systems Biology Knowledgebase Projects", PNNL Technical Report PNNL-SA-75957, [p/reprint]

- Joslyn, Cliff A; Baddeley, Bob; Blake, Judith; Bult, C; Dolan, M; Riensche, R; Rodland, K; Sanfilippo, A; and White, A: (2009) "Automated Annotation-Based Bio-Ontology Alignment with Structural Validation", in: Proc. Int. Conf. on Biomedical Ontology (ICBO 09), Nature Precedings, ed. Barry Smith, pp. 75-78, https://doi.org/10.1038/npre.2009.3518.1

- Verspoor, KM; Cohn, JD; Mniszewski, SM; and Joslyn, CA: (2006) "A Categorization Approach to Automated Ontological Function Annotation", Protein Science, v. 15:6, pp. 1544-1549, https://doi.org/10.1110/ps.062184006

- Joslyn, Cliff A; Gessler, DDG; Schmidt, SE; and Verspoor, KM: (2006) "Distributed Representations of Bio-Ontologies for Semantic Web Services", in: Joint BioLINK and 9th Bio-Ontologies Meeting (JBB 06), [p/reprint]

- Verspoor, KM; Joslyn, CA; Ambrosiano, JA; Bäcker, A; Bodenreider, O; Hirschman, L; Karp, P; Kelly, H; Loranger, S; Musen, M; Sriram, R; and Wroe, C: (2005) "Knowledge Integration for Biothreat Response", Los Alamos Technical Report LAUR 05-0907, https://www.lhncbc.nlm.nih.gov/system/files/pub2005035.pdf

- Verspoor, KM; Cohn, J; Mniszewski, S; and Joslyn, CA: (2005) "Nearest Neighbor Categorization for Function Prediction", in: Proc. 5th Community Wide Experiment on the Critical Assessment of Techniques for Protein Structure Prediction (CASP 05)

- Verspoor, KM; Cohn, J; Joslyn, CA; Mniszewski, SM; Rechtsteiner, Andreas; Rocha, Luis M; Simas, Tiago: (2005) "Protein Annotation as Term Categorization in the Gene Ontology Using Word Proximity Networks", in: BMC Bioinformatics, v. 6:s1, https://dx.doi.org/10.1186%2F1471-2105-6-S1-S20

- Verspoor, KM; Cohn, JD; Mniszewski, SM; and Joslyn, CA: (2005) "POSOLE: Automated Ontological Annotation for Function Prediction", in: Proc. Automated Function Prediction SIG, ISMB 05, https://www.biofunctionprediction.org/afp_programs/afp-2005-program.pdf, [p/reprint]

- Joslyn, Cliff A; Cohn, Judith; Verspoor, KM; and Mniszewski, SM: (2005) "Automating Ontological Function Annotation: Towards a Common Methodological Framework", in: Proc. Eighth Annual Bio-Ontologies Meeting, ISMB 2005, Detroit, [p/reprint]

- Joslyn, Cliff A; Mniszewski, Susan;